GEMPACK manual

Mark Horridge, Michael Jerie, Dean Mustakinov, Florian Schiffmann

31 Dec 2024

ISBN 978-1-921654-90-9

This online version of the GEMPACK documentation is designed for effective searching and navigation within your browser. You can click on on any blue link to jump to the indicated place, and use the Back button to return. Use the middle mouse button to open a link in a new tab. A yellow panel is visible at top right, with links to the Contents page and Index. To find information on a particular topic, go to the Index first. You can also use your browser's Edit..Find command to search through the entire document.

You can use your browser's View menu to adjust the text size. Or, using your keyboard, press CTRL and + or CTRL and - to zoom in or out. If your mouse has a wheel, hold down the CTRL key, and then scroll the wheel to zoom in or out. You may need to wait a second or two to see the effect.

This manual refers to GEMPACK Release 12.2 and later — but will be useful too for earlier releases.

Each section or subsection has a "topic ID" which appears in green at the end of section headings. GEMPACK programs may emit warning or error messages that refer you to sections of this manual. The references may include section numbers, section titles and topic IDs, but the topic ID is most likely to remain unchanged through successive revisions of the manual.

If you want to print out sections, use the matching PDF file, GPmanual.pdf.

We thank Ken Pearson and Jill Harrison who wrote large parts of previous GEMPACK manuals; much of their work is incorporated here.

A condition of your GEMPACK licence is that acknowledgement of GEMPACK must be made when reporting results that have been obtained with GEMPACK. To cite this manual, use:

Horridge J.M., Jerie M., Mustakinov D. & Schiffmann F. (2024), GEMPACK manual, GEMPACK Software, Centre of Policy Studies, Victoria University, Melbourne, ISBN 978-1-921654-34-3, https://ideas.repec.org/p/cop/wpaper/gpman.html

Other GEMPACK-related citations are suggested in section 1.7.

Brief Table of Contents

- 1 Introduction [gpd1.1]

- 2 Installing GEMPACK on Windows PCs [gpd6.1]

- 3 How to carry out simulations with models [gpd1.2]

- 4 Building or modifying models [gpd1.3]

- 5 Header Array files [harfiles]

- 6 Constructing HAR files [gpd1.4]

- 7 GEMPACK file types, names and suffixes [gpd1.5.8]

- 8 Overview of running TABLO and the TABLO language [gpd2.1]

- 9 Additional information about running TABLO [gpd2.2]

- 10 The TABLO language: basic syntax [gpd2.3]

- 11 Syntax and semantic details [gpd2.4]

- 12 TABLO statements for post-simulation processing [gpd5.2]

- 13 Ranking sets via data (finding winners and losers) [gpd5.3]

- 14 Condensing models [condense]

- 15 Verifying economic models [gpd2.6]

- 16 Intertemporal models [gpd2.7]

- 17 Less obvious examples of the TABLO language [gpd2.8]

- 18 Linearizing levels equations [gpd2.9]

- 19 Overview of simulation reference chapters 20 to 35 [gpd3.1]

- 20 Command (CMF) files [gpd3.2]

- 21 TABLO input files and auxiliary files [gpd3.3]

- 22 CMF statements for data files, updated data files and display files [gpd3.4]

- 23 Specifying the closure [gpd3.5.1a]

- 24 Specifying the shocks [gpd3.5.5]

- 25 Actions in GEMSIM and TABLO-generated programs [gpd3.6]

- 26 Multi-step solution methods [gpd3.7]

- 27 Solution (SL4) files [gpd3.8]

- 28 SLC, UDC, AVC and CVL files [slcfiles]

- 29 Subtotals via GEMSIM or TABLO-generated programs [gpd3.11]

- 30 Solving models and simulation time [gpd3.12]

- 31 Solving a model in parallel on a machine with two or more processors [gpd9.2]

- 32 Memory management [gpd3.13]

- 33 Options for GEMSIM and TABLO-generated programs [gpd3.14]

- 34 Run-time errors [gpd3.15]

- 35 Summary of command file statements [gpd3.18]

- 36 GEMPACK Windows programs [gpd4.2]

- 37 Command-line programs for working with header array files [gpd4.4]

- 38 Syntax of GEMPACK text data files [gpd4.6]

- 39 SLTOHT: processing simulation results [gpd4.8]

- 40 SLTOHT for spreadsheets [gpd4.9]

- 41 ACCUM and DEVIA : accumulation and differences [gpd4.10]

- 42 Hands-on tutorials for models supplied with GEMPACK [gpd8.1]

- 43 Getting started with GEMPACK via WinGEM [gpd8.2]

- 44 Command prompt: hands-on computing [gpd8.3]

- 45 Using RunGEM for simulations [gpd8.5]

- 46 Using AnalyseGE to analyse simulation results [gpd8.6]

- 47 Print edition ends here [endprint]

- 48 Working with GEMPACK command-line programs [gpd1.5]

- 49 Miscellaneous information [miscstuff]

- 50 Code options when running TABLO [gpd2.5]

- 51 Simulations for models with complementarities [gpd3.16]

- 52 Subtotals with complementarity statements [gpd5.7]

- 53 More examples of post-simulation processing [postsim2]

- 54 Using MODHAR to create or modify header array files [gpd4.3]

- 55 Ordering of variables and equations in solution and equation files [ordering]

- 56 SEENV: to see the closure on an environment file [gpd4.12]

- 57 Automated homogeneity testing [autohomog]

- 58 Several simultaneous Johansen simulations via SAGEM [gpd3.10]

- 59 Equations files and LU files [gpd3.9]

- 60 Example models supplied with GEMPACK [models]

- 61 Pivoting, memory-sharing, and other solution strategies [pivots]

- 62 Limited executable-image size limits [exelimits]

- 63 Some technical details of GEMPACK [technical]

- 64 Rarely used features of GEMPACK programs [legacy]

- 65 Choosing sets of variables interactively [gpd4.17]

- 66 Older ways to choose closure and shocks [oldshkclos]

- 67 Improving your TAB and CMF files [better]

- 68 Shock statements designed for use with RunDynam [rdynshoks]

- 69 GEMPACK on Linux, Unix or Mac OS X [unix]

- 70 TEXTBI : extracting TAB, STI and CMF files from AXT, SL4 and CVL files [gpd4.14]

- 71 History of GEMPACK [oldnewfeat]

- 72 TABLO-generated programs and GEMSIM - timing comparison [gpd8.4]

- 73 Fortran compilers for Source-code GEMPACK [fortrans]

- 74 LTG variants and compiler options [gpd6.7.5a]

- 75 Fine print about header array files [gpd4.5]

- 76 Converting binary files: LF90/F77L3 to/from Intel/LF95/GFortran [gpd4.15]

- 77 Translation between GEMPACK and GAMS data files [gdxhar]

- 78 Recent GEMPACK with older RunGTAP or RunDynam [gpd5.12]

- 79 SUMEQ: information from equations files [gpd4.13]

- 80 Older GEMPACK documents [gemdocs]

- 81 References [references]

- 82 Index [index]

- 83 End of document [docend]

Detailed Table of Contents

- 1 Introduction [gpd1.1]

- 1.1 Organization of this manual [manualoutline]

- 1.2 Using this manual [usingmanual]

- 1.2.1 For experienced GEMPACK users [gpd1.1.5.2]

- 1.2.2 For new GEMPACK users -- getting started [gpd1.1.5.1]

- 1.3 Supported Operating Systems [supportedos]

- 1.4 The GEMPACK programs [gpd9.1.5]

- 1.4.1 The original command-line programs [gpd9.1.5.1]

- 1.4.2 The Windows programs [gpd9.1.5.2]

- 1.4.3 TABLO-generated programs [gpd1.1.7.1]

- 1.5 Models supplied with GEMPACK [gpd1.1.8]

- 1.6 Different versions of GEMPACK and associated licences [gpd1.1.9]

- 1.6.1 Source-code versions and licences [gpd1.1.9.1]

- 1.6.2 Unlimited executable-image version and licence [gpd1.1.9.3]

- 1.6.3 Limited executable-image version and licence [gpd1.1.9.2]

- 1.6.4 Using Exe-image and Source-code GEMPACK together [gpeisc]

- 1.6.5 When is a licence needed [gpd1.1.9.7]

- 1.6.6 Introductory licence [gpd1.1.9.5]

- 1.7 Citing GEMPACK [citinggempack]

- 1.8 Communicating with GEMPACK [gpd1.1.2]

- 1.8.1 GEMPACK web site [gpd1.1.3]

- 1.8.2 GEMPACK-L mailing list [gpd1.1.4]

- 1.9 Training Courses [training]

- 1.10 Acknowledgments [ackintro]

- 2 Installing GEMPACK on Windows PCs [gpd6.1]

- 2.1 Preparing to install GEMPACK [gpd6.2]

- 2.1.1 System requirements [gpd6.2.1]

- 2.1.2 Your GEMPACK licence file [gplic]

- 2.1.3 Where to install [instdir]

- 2.1.4 Notes for IT support [itsupport]

- 2.1.5 [Source-code only] Testing the Fortran installation [gpd6.3.3]

- 2.1.6 Configure Anti-virus programs [antivirus]

- 2.2 Installing GEMPACK [gpd6.4]

- 2.2.1 If a warning appears after installing [pcawarning]

- 2.2.2 [Source-code only] If an error occurs [gpd6.4.1.1]

- 2.2.3 Some GEMPACK licences require activation [activation]

- 2.2.4 GEMPACK licence [gpd6.4.4]

- 2.2.5 Changes to your PATH and Environment [gpd6.4.4a]

- 2.2.6 System environment settings for performance [mathlibperf]

- 2.3 Testing the Installation [gpd6.5]

- 2.4 [Source-code only] Re-Testing the Installation [gpd6.5s]

- 2.5 If you still have problems [witsend]

- 2.6 More simulations to test GEMPACK and WinGEM [gpd6.5.3]

- 2.7 Working with GEMPACK [gpd6.6]

- 2.7.1 New model's directory location [gpd6.6.2]

- 2.7.2 [Source-code only] GEMSIM or TABLO-generated programs ? [gpd6.6.3]

- 2.7.3 Text editor [gpd6.6.4]

- 2.7.4 If you installed in a new directory (not C:\GP) [gpd6.4.3]

- 2.7.5 If a program runs out of memory [gpd6.6.5]

- 2.7.6 Copying GEMPACK programs to other PCs [gpd6.6.9]

- 2.8 Manually setting the PATH and GPDIR [gpd6.4.2]

- 2.8.1 Checking and setting PATH and GPDIR [gpd6.4.2.1]

- 2.8.2 Trouble-shooting environment variables [envvartrouble]

- 2.9 Technical Topics [gpd6.7]

- 2.9.1 [Source-code only] Running BuildGP [gpd6.7.3]

- 2.9.2 File association [gpd6.6.7]

- 2.9.3 Keep and Temporary directories and GEMPACK Windows programs [gpd6.6.8]

- 2.9.4 Installing GEMPACK on a network [gpd6.8]

- 2.9.5 Uninstalling GEMPACK [gpd6.7.6]

- 2.9.6 Finding GEMPACK Version and Release Information [identver]

- 2.1 Preparing to install GEMPACK [gpd6.2]

- 3 How to carry out simulations with models [gpd1.2]

- 3.1 An example simulation with stylized Johansen [gpd1.2.1]

- 3.1.1 Introduction to the stylized Johansen model [gpd1.2.1.1]

- 3.1.2 The simulation [gpd1.2.1.2]

- 3.2 Preparing a directory for model SJ [gpd1.2.4.2]

- 3.3 Using GEMPACK: WinGEM or command prompt? [gpd1.2.3]

- 3.4 Stylized Johansen example simulation [gpd1.2.4]

- 3.4.1 Starting WinGEM [gpd1.2.4.1]

- 3.4.2 Setting the working directory [gpd1.2.4.3]

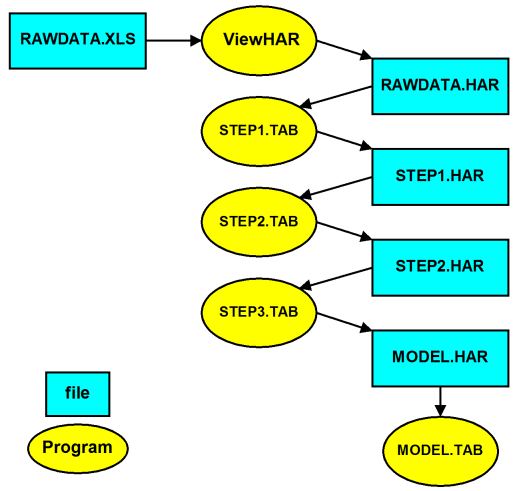

- 3.4.3 Looking at the data directly using ViewHAR [gpd1.2.4.4]

- 3.5 Implementing and running model SJ [gpd1.impsj]

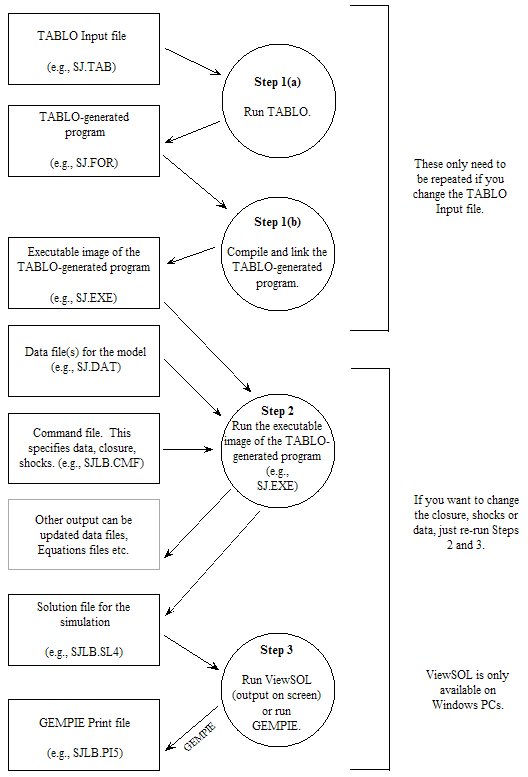

- 3.5.1 TABLO-generated program or GEMSIM? [gpd1.2.4.5]

- 3.5.2 Source-code method: using a TABLO-generated program [gpd1.2.4.6]

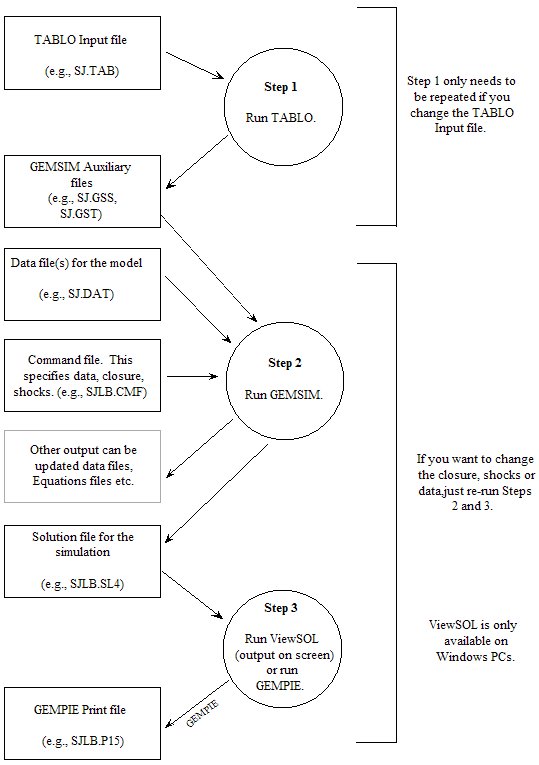

- 3.5.3 Executable-image method: using GEMSIM [gpd1.2.4.7]

- 3.5.4 Step 3 - View the Solution using ViewSOL [gpd1.2.viewsol]

- 3.6 The steps in carrying out a simulation [gpd1.2.5]

- 3.6.1 Steps 1 and 2 using a TABLO-generated program (source-code GEMPACK) [gpd1.2.5.1]

- 3.6.2 Steps 1 and 2 using GEMSIM [gpd1.2.5.2]

- 3.6.3 Other Simulations [gpd1.2.5.othsims]

- 3.7 Interpreting the results [gpd1.2.7]

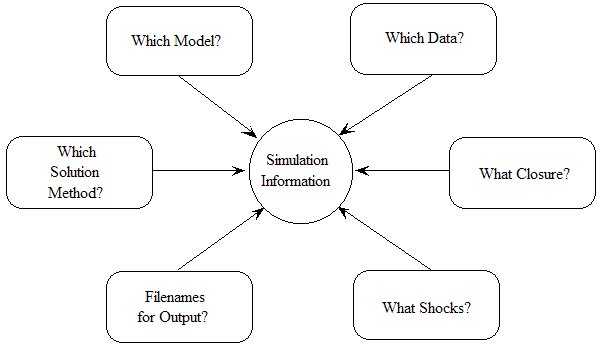

- 3.8 Specifying a simulation [gpd1.2.8]

- 3.9 The updated data - another result of the simulation [gpd1.2.9]

- 3.10 Preparing tables and graphs for a report [gpd1.2.10]

- 3.10.1 Example 1: Copying from ViewSOL to Spreadsheet and WordProcessor [gpd1.2.viewsolexport]

- 3.10.2 Example 2: Using the GEMPACK Program SLTOHT and Option SSS [gpd1.2.sltoht1]

- 3.10.3 Example 3: Using the Program SLTOHT and Option SES [gpd1.2.sltoht2]

- 3.10.4 Graphs [gpd1.2.10.1]

- 3.11 Changing the closure and shocks [gpd1.2.11]

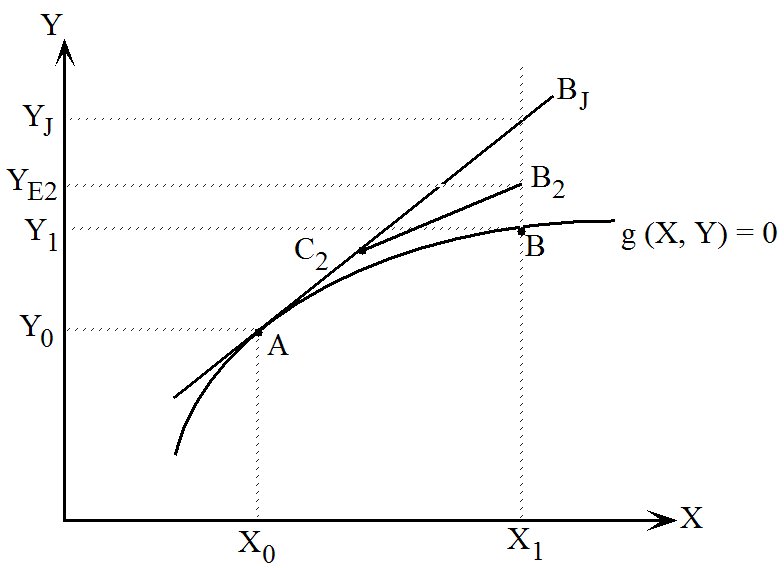

- 3.12 How Johansen and multi-step solutions are calculated [gpd1.2.13]

- 3.12.1 The linearized equations of a model [gpd1.2.13.1]

- 3.12.2 Johansen solutions [gpd1.2.13.2]

- 3.12.3 Multi-step simulations and accurate solutions of nonlinear equations [gpd1.2.13.3]

- 3.12.4 Smiling or frowning face numbers are reported [gpd5.9.2.1]

- 3.12.5 Fatal error if accuracy too low (GEMSIM and TG-programs) [gpd5.9.2.2]

- 3.13 GEMPACK programs - an overview [gpd1.2.14]

- 3.13.1 Working with TABLO input files [gpd1.2.14.1]

- 3.13.2 Carrying out simulations [gpd1.2.14.2]

- 3.13.3 Looking at, and processing, simulation results [gpd1.2.14.3]

- 3.13.4 Working with data [gpd1.2.14.4]

- 3.13.5 Windows modelling environments [gpd1.2.14.5]

- 3.13.6 Other programs for special tasks [gpd1.2.14.6]

- 3.13.7 Programs for use on non-Windows PCs [gpd1.2.14.7]

- 3.14 Different GEMPACK files [gpd1.2.15]

- 3.14.1 The most important files [gpd1.2.15.1]

- 3.14.2 Files for communication between programs [gpd1.2.15.2]

- 3.14.3 LOG files [gpd1.2.15.3]

- 3.14.4 Stored-input (STI) files [gpd1.2.15.4]

- 3.14.5 Files for power users [gpd1.2.15.5]

- 3.14.6 Summary of files [gpd1.2.15.7]

- 3.14.7 Work files [gpd1.2.15.6]

- 3.14.8 Files you can eventually delete [junkfiles]

- 3.15 For new users - what next? [gpd1.2.16]

- 3.1 An example simulation with stylized Johansen [gpd1.2.1]

- 4 Building or modifying models [gpd1.3]

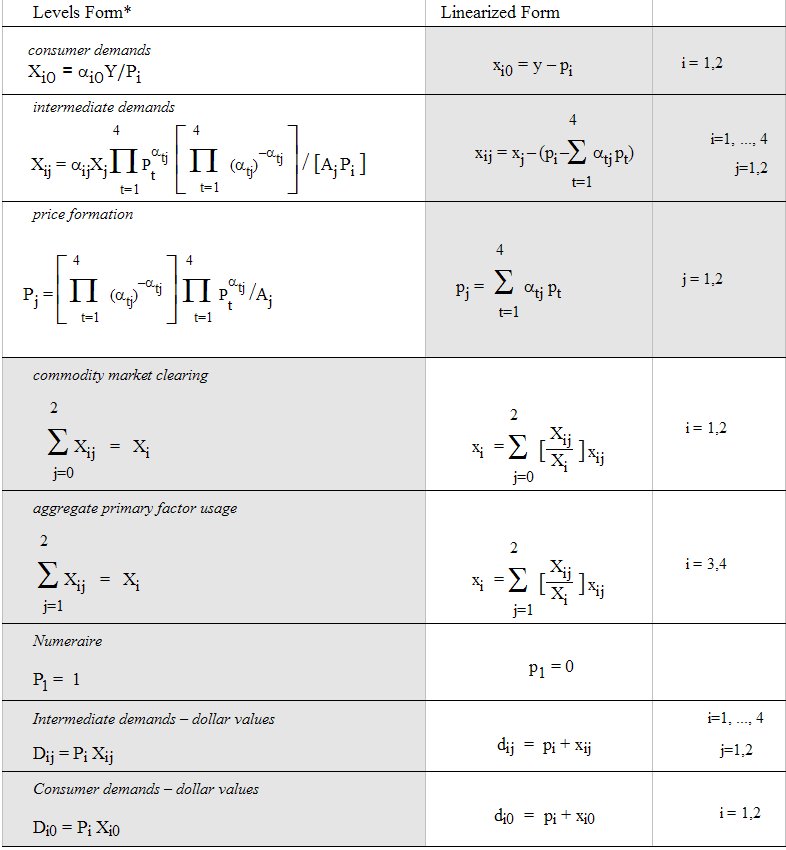

- 4.1 Writing down the equations of a model [gpd1.3.1]

- 4.2 Data requirements for the linearized equations [gpd1.3.2]

- 4.2.1 Data requirements for Stylized Johansen [gpd1.3.2.1]

- 4.3 Constructing the TABLO input file for a model [gpd1.3.3]

- 4.3.1 Viewing the TABLO input file [gpd1.3.3.1]

- 4.3.2 Constructing part of the TABLO input file for Stylized Johansen [gpd1.3.3.2]

- 4.3.3 "Mixed" TABLO input file for the Stylized Johansen model [gpd1.3.3.3]

- 4.3.4 Completing the TABLO input file for Stylized Johansen [gpd1.3.3.4]

- 4.3.5 Change or percentage-change variables [gpd1.3.3.5]

- 4.3.6 Variable or parameter ? [gpd1.3.3.6]

- 4.3.7 TABLO language - syntax and semantics [gpd1.3.3.7]

- 4.4 Linearized TABLO input files [gpd1.3.4]

- 4.4.1 A linearized TABLO input file for Stylized Johansen [gpd1.3.4.1]

- 4.4.2 Noteworthy features in the linearized TABLO input file [gpd1.3.4.2]

- 4.4.3 Analysing simulation results [gpd1.3.4.3]

- 4.4.4 Writing UPDATE statements [gpd1.3.4.4]

- 4.4.5 Numerical versions of linearized equations [gpd1.3.4.5]

- 4.4.6 Numerical examples of update statements [gpd1.3.4.6]

- 4.4.7 How equations are recalculated in a multi-step calculation [gpd1.3.4.7]

- 4.5 Levels TABLO input files [gpd1.3.5]

- 4.5.1 Levels TABLO input file for Stylized Johansen [gpd1.3.5.1]

- 4.6 TABLO linearizes levels equations automatically [gpd1.3.7]

- 4.7 Creating the TABLO input file and command files for your own model [gpd1.3.9]

- 4.7.1 Correcting errors in TABLO input files [gpd1.3.9.1]

- 4.7.2 Correcting errors in command files [gpd1.3.9.2]

- 5 Header Array files [harfiles]

- 5.0.1 Data on Header Array files [gpd4.3.1]

- 5.0.2 Array type [gpd4.3.1.1]

- 5.0.3 Set and element labelling on header array files [gpd4.5a]

- 5.0.4 Long names [gpd4.3.1.2]

- 5.0.5 File history and creation information [gpd9.7.5.3]

- 5.1 Ways to create or modify header array files [gpd4.3.2]

- 6 Constructing HAR files [gpd1.4]

- 6.1 Constructing the header array data file for Stylized Johansen [sjmkdat]

- 6.2 Editing the header array data file for Stylized Johansen [sjmoddat]

- 6.2.1 Other ViewHAR capabilities [gpd1.4.5.8]

- 6.3 Checking data is balanced [gpd1.4.7]

- 6.3.1 SJCHK.TAB to check balance for Stylized Johansen [gpd1.4.7.1]

- 6.3.2 SJCHK.TAB to check balance of updated data [gpd1.4.7.2]

- 6.3.3 GTAPVIEW for checking and summarising GTAP data [gpd1.4.7.3]

- 6.3.4 Checking the balance of ORANIG data [gpd1.4.7.4]

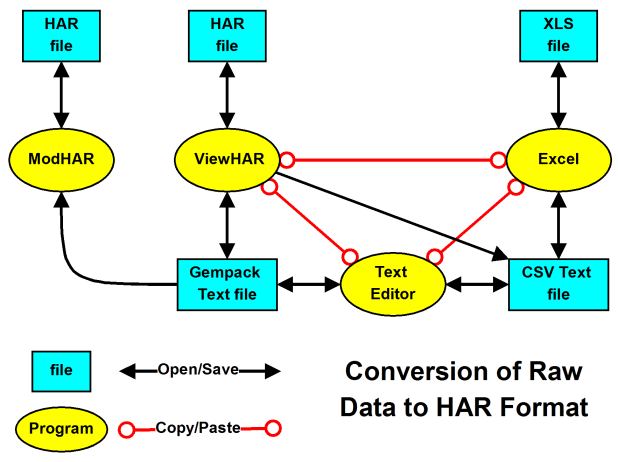

- 6.4 Which program to use in file conversion? [gpd1.4.8]

- 6.5 Further information [gpd1.4.9]

- 7 GEMPACK file types, names and suffixes [gpd1.5.8]

- 7.0.1 Files with system-determined suffixes [gpd1.5.8.1]

- 7.0.2 Suffixes of other files [gpd1.5.8.2]

- 7.0.3 Files -- binary, header array or text? [gpd1.5.8.3]

- 7.0.4 Why so many files? [gpd1.5.8.4]

- 7.1 Allowed file and directory names [gpd1.5.9]

- 7.1.1 File names containing spaces [gpd1.5.9.4]

- 7.1.2 Characters in stored-input files and command files [gpd1.5.9.5]

- 7.1.3 Files that could be deleted [cleanupfiles]

- 8 Overview of running TABLO and the TABLO language [gpd2.1]

- 8.1 Running TABLO on an existing model [gpd2.1.1]

- 8.1.1 Example models ORANIG01 and GTAP61 [gpd2.1.1.1]

- 8.1.2 TAB file and WFP/PGS on command line [gpd5.10.1.2]

- 8.1.3 Running TABLO from TABmate with no STI file [tablocode]

- 8.1.4 Preliminary pass to count statements [gpd2.1.1.2]

- 8.2 Compiling and linking TABLO-generated programs [gpd2.1.2]

- 8.3 Writing a TABLO input file for a new model [gpd2.1.4]

- 8.4 Modern ways of writing TABLO input files (on a PC) [gpd2.1.5]

- 8.4.1 Using TABmate [gpd2.1.5.1]

- 8.4.2 Using TABmate to find a closure and suggest substitutions [autoclosure]

- 8.4.3 Using TABmate to create a CMF file [autocmf]

- 8.4.4 Using TABmate to reformat your code [beautyparlour]

- 8.4.5 Using ViewHAR to write TABLO code for data manipulation [gpd2.1.5.2]

- 8.5 Condensing a large model [gpd2.1.6]

- 8.5.1 Condensation in STI file: a legacy technique [oldsticond]

- 8.1 Running TABLO on an existing model [gpd2.1.1]

- 9 Additional information about running TABLO [gpd2.2]

- 9.1 TABLO options [gpd2.2.1]

- 9.1.1 TABLO input file written on the auxiliary files [gpd2.2.1.1]

- 9.1.2 TABLO file and TABLO STI file stored on solution file [gpd2.2.1.2]

- 9.1.3 Specialised check options [gpd2.2.1.3]

- 9.1.4 Doing condensation or going to code generation [gpd2.2.1.4]

- 9.1.5 TABLO code options [gpd2.2.1.5]

- 9.1.6 Identifying and correcting syntax and semantic errors [gpd2.2.1.6]

- 9.2 TABLO linearizes levels equations automatically [gpd2.2.2]

- 9.2.1 Change or percentage-change associated linear variables [gpd2.2.2.1]

- 9.2.2 How levels variable statements are converted [gpd2.2.2.2]

- 9.2.3 How levels equation statements are converted [gpd2.2.2.3]

- 9.2.4 Linearizing levels equations [gpd2.2.2.4]

- 9.2.5 Linearizing a sum [gpd2.2.2.5]

- 9.2.6 Algorithm used by TABLO [gpd2.2.2.6]

- 9.2.7 TABLO can write a linearised TAB file [gpd9.7.19]

- 9.3 Reporting Newton error terms in models with levels equations [gpd5.9.14]

- 9.1 TABLO options [gpd2.2.1]

- 10 The TABLO language: basic syntax [gpd2.3]

- 10.1 SET [gpd2.3.1]

- 10.1.1 Set expressions (includes unions, intersections and complements) [gpd2.3.1.0]

- 10.1.2 Equal sets [gpd2.3.1.2]

- 10.1.3 Data-dependent sets [gpd2.3.1.3]

- 10.1.4 Set examples from ORANIG model [gpd2.3.1.5]

- 10.1.5 Set examples from GTAP model [gpd2.3.1.6]

- 10.1.6 Set products [gpd2.3.1.4]

- 10.1.7 Multidimensional sets and tuples [tuples]

- 10.1.8 Ranked sets [gpd2.3.1.6a]

- 10.2 SUBSET [gpd2.3.2]

- 10.2.1 Subset examples from ORANIG model [gpd2.3.2.1]

- 10.2.2 Subset examples from GTAP model [gpd2.3.2.2]

- 10.3 COEFFICIENT [gpd2.3.3]

- 10.4 VARIABLE [gpd2.3.4]

- 10.5 FILE [gpd2.3.5]

- 10.5.1 SSE output [gpd5.9.1]

- 10.6 READ [gpd2.3.6]

- 10.7 WRITE [gpd2.3.7]

- 10.8 FORMULA [gpd2.3.8]

- 10.9 EQUATION [gpd2.3.9]

- 10.9.1 FORMULA & EQUATION [gpd2.3.9.1]

- 10.10 UPDATE [gpd2.3.10]

- 10.11 ZERODIVIDE [gpd2.3.11]

- 10.11.1 More about zerodivides [gpd2.4.13]

- 10.12 DISPLAY [gpd2.3.12]

- 10.13 MAPPING [gpd2.3.13]

- 10.13.1 Formulas for mappings, and reading and writing mappings [gpd2.3.13.1]

- 10.13.2 Projection mappings from a set product [gpd2.3.13.2]

- 10.14 ASSERTION [gpd2.3.14]

- 10.15 TRANSFER [gpd2.3.15]

- 10.16 OMIT, SUBSTITUTE and BACKSOLVE [gpd2.3.16_17_18]

- 10.17 COMPLEMENTARITY [gpd2.3.19]

- 10.18 POSTSIM [gpd2.3.19a]

- 10.19 Setting default values of qualifiers [gpd2.3.20]

- 10.20 TABLO statement qualifiers - a summary [gpd2.3.21]

- 10.20.1 Spaces and qualifier syntax [gpd2.3.21.1]

- 10.1 SET [gpd2.3.1]

- 11 Syntax and semantic details [gpd2.4]

- 11.1 General notes on the TABLO syntax and semantics [gpd2.4.1]

- 11.1.1 TABLO statements [gpd2.4.1.1]

- 11.1.2 Lines of the TABLO input file [gpd2.4.1.2]

- 11.1.3 Upper and lower case [gpd2.4.1.3]

- 11.1.4 Comments [gpd2.4.1.4]

- 11.1.5 Strong comment markers [gpd2.4.1.5]

- 11.1.6 Reserved (special) characters [gpd2.4.1.6]

- 11.1.7 Chinese and accented characters [nonascii]

- 11.2 User defined input [gpd2.4.2]

- 11.2.1 Names [gpd2.4.2.1]

- 11.2.2 Abbreviating lists of set elements [gpd2.4.2.0]

- 11.2.3 Labelling information (text between hashes #) [gpd2.4.2.2]

- 11.2.4 Arguments: indices, set elements or index expressions [gpd2.4.2.3]

- 11.2.5 Index expressions with elements from run-time sets [gpd2.4.2.4]

- 11.3 Quantifiers and quantifier lists [gpd2.4.3]

- 11.4 Expressions used in equations, formulas and updates [gpd2.4.4]

- 11.4.1 Operations used in expressions [gpd2.4.4.1]

- 11.4.2 Brackets in expressions [gpd2.4.4.3]

- 11.4.3 Sums over sets in expressions [gpd2.4.4.2]

- 11.4.4 MaxS, MinS and Prod operators [gpd2.4.4.2a]

- 11.4.5 Conditional quantifiers and SUMs, PRODs, MAXS and MINS [gpd2.4.4.5]

- 11.4.6 Conditional expressions [gpd2.4.4.6]

- 11.4.7 Index-IN-Set condition for IF expressions [gpd2.4.4.6b]

- 11.4.8 Linear variables in expressions [gpd2.4.4.7]

- 11.4.9 Constants in expressions [gpd2.4.4.8]

- 11.4.10 Indices in expressions [gpd2.4.4.9]

- 11.4.11 Index-expression conditions [gpd2.4.4.10]

- 11.5 Functions [gpd2.4.4.4]

- 11.5.1 ID01 and ID0V functions [gpd2.4.4.4.1]

- 11.5.2 RANDOM function [gpd2.4.4.4.2]

- 11.5.3 Statistical functions [statistical]

- 11.5.4 NORMAL and CUMNORMAL functions [gpd2.4.4.4.3]

- 11.5.5 Functions for log-normal distribution [gpd9.7.9]

- 11.5.6 $POS function [gpd2.4.4.4.4]

- 11.5.7 ROUND, TRUNC0 and TRUNCB functions [gpd2.4.4.4.5]

- 11.6 Coefficients and levels variables [gpd2.4.5]

- 11.6.1 Coefficients -- what are they ? [gpd2.4.5.1]

- 11.6.2 Model parameters [gpd2.4.5.2]

- 11.6.3 Integer coefficients in expressions and elsewhere [gpd2.4.5.3]

- 11.6.4 Where coefficients and levels variables can occur [gpd2.4.5.4]

- 11.6.5 Reporting levels values when carrying out simulations [gpd2.4.5.5]

- 11.6.6 How do you see the pre-simulation and post-simulation levels values? [gpd2.4.5.6]

- 11.6.7 Specifying acceptable range of coefficients read or updated [gpd2.4.5.7]

- 11.7 Sets [gpd2.4.6]

- 11.7.1 Set size and set elements [gpd2.4.6.1]

- 11.7.2 Obsolete "maximum size" in SET statements [gpd2.4.6.2]

- 11.7.3 Set unions, intersections and equality [gpd2.4.6.3]

- 11.7.4 Set complements and relative complements [gpd2.4.6.4]

- 11.7.5 Longer set expressions [gpd2.4.6.4b]

- 11.7.6 Sets whose elements depend on data [gpd2.4.6.5]

- 11.7.7 Writing the elements of one set [gpd2.4.6.6]

- 11.7.8 Writing the elements of all (or many) sets [gpd2.4.6.7]

- 11.7.9 Empty sets [gpd2.4.6.8]

- 11.7.10 Reading set elements from a file [gpd2.4.6.9]

- 11.7.11 Creating element names for set products [gpd2.4.6.10]

- 11.8 Subsets [gpd2.4.7]

- 11.9 Mappings between sets [gpd2.4.8]

- 11.9.1 Defining set mapping values [gpd2.4.8.1]

- 11.9.2 Checking values of a mapping [gpd2.4.8.2]

- 11.9.3 Insisting that a set mapping be onto [gpd2.4.8.3]

- 11.9.4 Using set mappings [gpd2.4.8.4]

- 11.9.5 Set Mappings can only be used in index expressions [gpd2.4.8.5]

- 11.9.6 Two or more set mappings in an index expression [gpd2.4.8.6]

- 11.9.7 Set mappings in arguments [gpd2.4.8.7]

- 11.9.8 Set mappings on LHS of a Formula [mapfmlhs]

- 11.9.9 Other semantics for mappings [gpd2.4.8.8]

- 11.9.10 Writing the values of set mappings [gpd2.4.8.9]

- 11.9.11 Reading part of a set mapping BY_ELEMENTS [gpd2.4.8.10]

- 11.9.12 Mapping values can be given by values of other mapping or index [gpd2.4.8.11]

- 11.9.13 Writing a set mapping to a text file [gpd2.4.8.12]

- 11.9.14 Long name when a set mapping is written to a header array file [gpd2.4.8.14]

- 11.9.15 ViewHAR and set mappings [gpd2.4.8.15]

- 11.10 Files [gpd2.4.9]

- 11.10.1 Text files [gpd2.4.9.1]

- 11.11 Reads, writes and displays [gpd2.4.10]

- 11.11.1 How data is associated with coefficients [gpd2.4.10.1]

- 11.11.2 Partial reads, writes and displays [gpd2.4.10.2]

- 11.11.3 Seeing the values of integer coefficients of dimension 3 or higher [gpd5.8.5]

- 11.11.4 FORMULA(INITIAL)s [gpd2.4.10.3]

- 11.11.5 Coefficient initialisation [gpd2.4.10.4]

- 11.11.6 Display files [gpd2.4.10.5]

- 11.11.7 Transferring long names when executing write statements [gpd2.4.10.6]

- 11.11.8 Reads only if header exists -- read qualifier ifheaderexists [gpd5.8.1]

- 11.12 Updates [gpd2.4.11]

- 11.12.1 Purpose of updates [gpd2.4.11.1]

- 11.12.2 Which type of update? [gpd2.4.11.2]

- 11.12.3 What if an initial value is zero ? [gpd2.4.11.3]

- 11.12.4 UPDATE semantics [gpd2.4.11.4]

- 11.12.5 Example 1 of deriving update statements: sum of two flows [gpd2.4.11.5]

- 11.12.6 Example 2 of deriving update statements: powers of taxes [gpd2.4.11.6]

- 11.12.7 Example 3 of deriving update statements: an update (change) statement [gpd2.4.11.7]

- 11.12.8 Writing updated values from FORMULA(INITIAL)s [gpd2.4.11.8]

- 11.13 Transfer statements [gpd2.4.12]

- 11.13.1 XTRANSFER Statements on command files [gpd2.4.12.1]

- 11.14 Complementarity semantics [gpd2.4.14]

- 11.14.1 Condensation and complementarities [gpd2.4.14.1]

- 11.14.2 Linking the complementarity to existing linear variables [gpd2.4.14.2]

- 11.15 The RAS_MATRIX function [gpd9.7.10]

- 11.15.1 The RAS procedure [gpd9.7.10.1]

- 11.15.2 An intuitive way to think of the RAS [gpd9.7.10.2]

- 11.15.3 RAS iterations [gpd9.7.10.3]

- 11.15.4 RAS syntax in TABLO [gpd9.7.10.4]

- 11.15.5 The RAS report [gpd9.7.10.5]

- 11.15.6 Warnings of potential problems [gpd9.7.10.6]

- 11.16 Ordering [gpd2.4.15]

- 11.16.1 Ordering of the input statements [gpd2.4.15.1]

- 11.16.2 Order in which reads, formulas, equations and updates are performed [gpd2.4.15.6]

- 11.17 TABLO input files with no equations [gpd2.4.16]

- 11.18 Loops in TAB files [loop-tab]

- 11.1 General notes on the TABLO syntax and semantics [gpd2.4.1]

- 12 TABLO statements for post-simulation processing [gpd5.2]

- 12.1 Simple examples of PostSim statements [postsimexm]

- 12.2 PostSim TAB file statements, syntax and semantic rules [gpd5.2.5]

- 12.2.1 Statements allowed in post-simulation sections of a TAB file [gpd5.2.5.1]

- 12.2.2 Syntax and semantics for formulas in PostSim part of TAB file [gpd5.2.5.2]

- 12.2.3 Semantics for reads in PostSim part of TAB file [gpd5.2.5.3]

- 12.2.4 Semantics for assertions in PostSim part of TAB file [gpd5.2.5.4]

- 12.2.5 Semantics for zerodivides in PostSim part of TAB file [gpd5.2.5.5]

- 12.2.6 Default statements and certain qualifiers not relevant in PostSim part [gpd5.2.5.6]

- 12.2.7 PostSim sets, subsets, coefficients not allowed in the ordinary part [gpd5.2.5.7]

- 12.2.8 PostSim qualifier for write or display [gpd5.2.5.8]

- 12.2.9 Implications for condensation [gpd5.2.5.9]

- 12.3 PostSim extra statements in a command file [gpd5.2.6]

- 12.4 PostSim parts of TAB file and AnalyseGE [gpd5.2.9]

- 12.4.1 Coefficients or expressions selected from the TABmate form [gpd5.2.9.1]

- 12.4.2 Expressions evaluated from the AnalyseGE form [gpd5.2.9.2]

- 12.4.3 UDC files now redundant? [gpd5.2.9.3]

- 12.5 Technical details: When and how is post-simulation part done? [gpd5.2.8]

- 12.6 Advantages of PostSim processing in TAB file [gpd5.2.4]

- 13 Ranking sets via data (finding winners and losers) [gpd5.3]

- 13.1 Example: identifying winners and/or losers [gpd5.3.1]

- 13.1.1 Ranking example OG01PS.TAB [gpd5.3.1.1]

- 13.2 Ranked set statements -- syntax and semantics [gpd5.3.2]

- 13.3 Converting non-intertemporal sets to intertemporal sets and vice versa [gpd5.3.3]

- 13.3.1 TABLO statements [gpd5.3.3.1]

- 13.3.2 Household income example in detail [gpd5.3.3.2]

- 13.1 Example: identifying winners and/or losers [gpd5.3.1]

- 14 Condensing models [condense]

- 14.1 Condensing models [gpd1.3.8]

- 14.1.1 Substituting out variables [gpd1.3.8.1]

- 14.1.2 Condensation examples [gpd1.3.8.2]

- 14.1.3 Backsolving for variables [gpd1.3.8.3]

- 14.1.4 Condensation examples using backsolving [gpd1.3.8.4]

- 14.1.5 Results on the solution file in condensation examples [gpd1.3.8.5]

- 14.1.6 Should all substitutions be backsolves? [gpd1.3.8.6]

- 14.1.7 Omitting variables [gpd1.3.8.7]

- 14.1.8 Automatic substitutions [gpd1.3.8.7b]

- 14.1.9 Condensation actions can be put on the TABLO input file [gpd1.3.8.8]

- 14.1.10 More details about condensation and substituting variables [gpd2.2.3]

- 14.1.11 Looking ahead to substitution when creating TABLO input files [gpd2.2.3.1]

- 14.1.12 System-initiated formulas and backsolves [gpd2.2.3.2]

- 14.1.13 Treating substitutions as backsolves - option ASB [gpd2.2.3.4]

- 14.1.14 Condensation statements on a TABLO input file [gpd2.2.4]

- 14.1.15 Ignoring TABLO condensation [gpd2.2.4.1]

- 14.1.16 Condensation information file [gpd9.7.8]

- 14.1.17 Submatrix nonzeros information file [smnzinffile]

- 14.1.18 CondOpt tool to find optimal condensation [condopt]

- 14.1 Condensing models [gpd1.3.8]

- 15 Verifying economic models [gpd2.6]

- 15.1 Is balanced data still balanced after updating? [gpd2.6.1]

- 15.1.1 Balance after n-steps [gpd2.6.1.1]

- 15.1 Is balanced data still balanced after updating? [gpd2.6.1]

- 16 Intertemporal models [gpd2.7]

- 16.1 Introduction to intertemporal models [gpd2.7.1]

- 16.2 Intertemporal sets [gpd2.7.2]

- 16.3 Use an INTEGER coefficient to count years [gpd2.7.3]

- 16.4 Enhancements to semantics for intertemporal models [gpd2.7.4]

- 16.5 Recursive formulas over intertemporal sets [gpd2.7.5]

- 16.6 Constructing an intertemporal data set satisfying all model equations [gpd2.7.6]

- 16.6.1 Example using the CRTS intertemporal model [gpd2.7.6.1]

- 16.7 ORANI-INT: A multi-sector rational expectations model [gpd2.7.7]

- 17 Less obvious examples of the TABLO language [gpd2.8]

- 17.1 Flexible formula for the size of a set [gpd2.8.1]

- 17.2 Adding across time periods in an intertemporal model [gpd2.8.2]

- 17.3 Conditional functions or equations [gpd2.8.3]

- 17.3.1 Other methods [gpd2.8.3b]

- 17.4 Aggregating data and simulation results [gpd2.8.4]

- 17.5 Use of special sets and coefficients [gpd2.8.5]

- 18 Linearizing levels equations [gpd2.9]

- 18.1 Differentiation rules used by TABLO [gpd2.9.1]

- 18.2 Linearizing equations by hand [gpd2.9.2]

- 18.2.1 General procedure - change differentiation [gpd2.9.2.1]

- 18.2.2 Rules to use [gpd2.9.2.2]

- 18.2.3 Linearizing equations in practice [gpd2.9.2.3]

- 18.2.4 Linearizing using standard references [gpd2.9.2.4]

- 18.3 Linearized equations on information file [gpd2.9.3]

- 18.4 Linearized equations via AnalyseGE [gpd2.9.3b]

- 18.5 Be careful when using linearized equations [gpd2.9.4]

- 18.6 Keep formulas and equations in synch [gpd2.9.4b]

- 19 Overview of simulation reference chapters 20 to 35 [gpd3.1]

- 20 Command (CMF) files [gpd3.2]

- 20.1 Data manipulation using GEMSIM or TABLO-generated programs [gpd3.2.2]

- 20.2 Simulations using GEMSIM or TABLO-generated programs [gpd3.2.1]

- 20.3 SAGEM simulations [gpd3.2.3]

- 20.4 File names [gpd3.2.4]

- 20.4.1 Naming the command file [gpd3.2.4.1]

- 20.4.2 File names containing spaces [gpd3.2.4.2]

- 20.5 Using the command file stem for names of other output files [gpd3.2.5]

- 20.5.1 Default: solution file stem = command file stem [gpd3.2.5.1]

- 20.5.2 Default: log file stem = command file stem [gpd3.2.5.2]

- 20.5.3 Default: display file stem = command file stem [gpd3.2.5.3]

- 20.5.4 Using <CMF> in command files [gpd3.2.5.4]

- 20.5.5 Position of <CMF> in command file statements [gpd9.7.16]

- 20.6 Log files and reporting CPU time [gpd3.2.6]

- 20.7 General points about command file statements [gpd3.2.7]

- 20.8 Eliminating syntax errors in GEMPACK command files [gpd3.2.8]

- 20.9 Replaceable parameters in CMF files [cmfparm]

- 20.9.1 The newcmf= option [newcmf]

- 21 TABLO input files and auxiliary files [gpd3.3]

- 21.1 GEMSIM and GEMSIM auxiliary files [gpd3.3.1]

- 21.2 TABLO-generated programs and auxiliary files [gpd3.3.2]

- 21.3 How are the names of the auxiliary files determined? [gpd3.3.3]

- 21.4 Check that auxiliary files are correct [gpd3.3.4]

- 21.4.1 Model information (MIN) file date [gpd3.3.4.1]

- 21.5 Carrying out simulations on other machines [gpd3.3.5]

- 21.5.1 Copying TABLO-generated programs to other PCs [gpd3.3.5.1]

- 21.5.2 GEMPACK licence may be required [gpd3.3.5.2]

- 22 CMF statements for data files, updated data files and display files [gpd3.4]

- 22.1 Data files and logical files [gpd3.4.1]

- 22.1.1 Input and output data files [gpd3.4.1.1]

- 22.1.2 Using the same filename twice in CMF files [dupefilenames]

- 22.2 Updated data files [gpd3.4.2]

- 22.2.1 Naming updated files [gpd3.4.2.1]

- 22.2.2 Updated data read from the terminal [gpd3.4.2.2]

- 22.2.3 Intermediate data files [gpd3.4.2.3]

- 22.2.4 Long names on updated data files [gpd3.4.2.4]

- 22.3 Display files [gpd3.4.3]

- 22.3.1 Options for display files [gpd3.4.3.1]

- 22.4 Checking set and element information when reading data [gpd3.4.4]

- 22.5 Names of intermediate data files [gpd3.4.5]

- 22.1 Data files and logical files [gpd3.4.1]

- 23 Specifying the closure [gpd3.5.1a]

- 23.1 The closure [gpd3.5.1]

- 23.1.1 Miniature ORANI model [gpd3.5.1.1]

- 23.2 Specifying the closure via a command file [gpd3.5.2]

- 23.2.1 Listing exogenous variable names and components. [gpd3.5.2.1]

- 23.2.2 Closure swap statements [gpd3.5.2.1a]

- 23.2.3 Order of command file statements can be important [gpd3.5.2.4]

- 23.2.4 Closure specified using data files [gpd3.5.2.5]

- 23.2.5 Using a saved closure [gpd3.5.2.2]

- 23.2.6 List of exogenous/endogenous variables when closure is invalid [gpd3.5.2.6]

- 23.2.7 Initial closure check and singular matrices [gpd3.5.2.7]

- 23.1 The closure [gpd3.5.1]

- 24 Specifying the shocks [gpd3.5.5]

- 24.1 Specifying simple shocks [gpd3.5.5.1]

- 24.2 Reading nonuniform shocks from a file [gpd3.5.5.2]

- 24.3 Using the "select from" shock statement [gpd3.5.5.1b]

- 24.4 Shock components must usually be in increasing order [gpd3.5.5.3]

- 24.5 When to use "select from" [gpd9.7.17]

- 24.6 Other points about shocks [gpd3.5.5.4x]

- 24.6.1 Using SEENV to generate shock statements [gpd3.5.5.4seenv]

- 24.6.2 Shock statements using component numbers and/or lists of values [gpd3.5.5.4b]

- 24.6.3 Shocking a variable with no components exogenous [gpd3.5.5.5]

- 24.7 FINAL_LEVEL statements : alternatives to shock statements [gpd3.5.6]

- 24.8 Shocks from a coefficient [shcoeff]

- 24.8.1 Examples [shcoeff1]

- 24.8.2 Motivation [shcoeff2]

- 24.8.3 Formal rules [shcoeff3]

- 24.8.4 Fine print [shcoeff4]

- 24.9 CHANGE or PERCENT_CHANGE shock statements [gpd3.5.7]

- 24.10 Rate% shock statement [gpd5.9.16]

- 24.10.1 Fine print about rate% shock statements [gpd5.9.16.1]

- 24.11 Shock files: fine print [gpd3.5.8b]

- 24.12 Checking the closure and shocks [gpd3.5.9]

- 24.13 Levels variable names can be used in command files [gpd3.5.10]

- 24.14 Clarification concerning shocks on a command file [gpd3.5.11]

- 24.14.1 Shock statement when only some components are exogenous [gpd3.5.11.1]

- 24.14.2 At least one shock statement is required. [gpd3.5.11.2]

- 25 Actions in GEMSIM and TABLO-generated programs [gpd3.6]

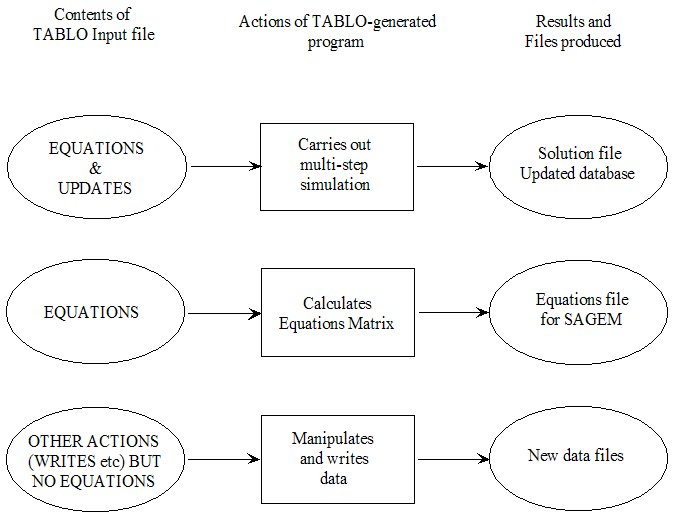

- 25.1 Possible actions in GEMSIM and TABLO-generated programs [gpd3.6.1]

- 25.1.1 Multi-step simulations with economic models [gpd3.6.1.1]

- 25.1.2 Creating an equations file [gpd3.6.1.2]

- 25.1.3 Other actions [gpd3.6.1.3]

- 25.1.4 Data manipulation [gpd3.6.1.4]

- 25.1.5 "extra" (TABLO-like) actions [gpd3.6.1.5]

- 25.1.6 Checking the closure and shocks [gpd3.6.1.6]

- 25.1.7 Postsim passes and actions [gpd3.6.1.6a]

- 25.1.8 Controlling whether and how the actions are carried out [gpd3.6.1.7]

- 25.1.9 Some reads and FORMULAS may be omitted [gpd3.6.1.8]

- 25.1.10 Writes and displays at all steps of a multi-step simulation [gpd3.6.1.9]

- 25.1.11 Echoing activity [gpd3.6.1.10]

- 25.1.12 Writes to the terminal [gpd3.6.1.11]

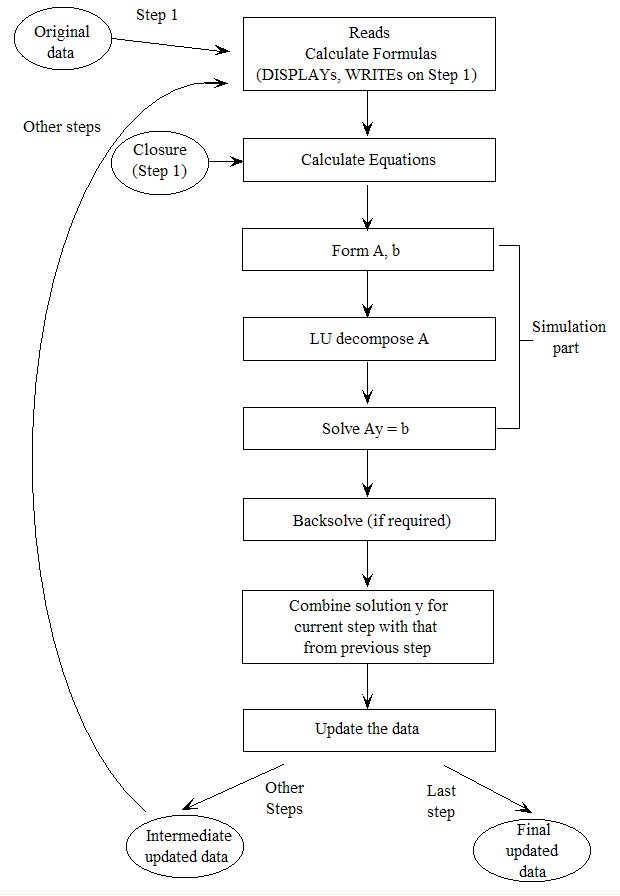

- 25.2 How these programs carry out multi-step simulations [gpd3.6.2]

- 25.2.1 Processing the closure and shocks [gpd3.6.2.1]

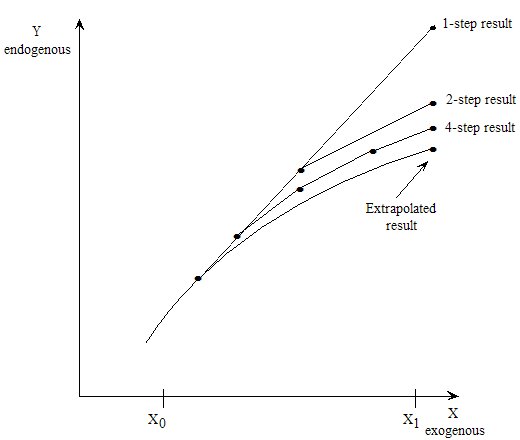

- 25.2.2 Results of a 4-step simulation looked at in detail [gpd3.6.2.2]

- 25.2.3 Do the accurate results satify the linear equations? [gpd3.6.2.3]

- 25.3 Assertions [gpd3.6.3]

- 25.4 Range tests [gpd3.6.4]

- 25.4.1 Specifying the allowed range for a coefficient or levels variable [gpd3.6.4.1]

- 25.4.2 Tests carried out [gpd3.6.4.2]

- 25.4.3 An example [gpd3.6.4.3]

- 25.4.4 Associated statements in command files [gpd3.6.4.4]

- 25.5 Transfer statements [gpd3.6.5]

- 25.6 TABLO-like statements in command files [gpd3.6.6]

- 25.6.1 TABLO-like checks of extra statements [gpd3.6.6.1]

- 25.6.2 Qualifiers [gpd3.6.6.2]

- 25.6.3 Other points [gpd3.6.6.3]

- 25.7 Coefficients are fully initialised by default [gpd3.6.7]

- 25.8 How accurate are arithmetic calculations? [gpd3.6.8]

- 25.8.1 Example 1 - GTAPVIEW [gpd3.6.8.1]

- 25.8.2 Example 2 - checking balance of ORANIG data [gpd3.6.8.2]

- 25.1 Possible actions in GEMSIM and TABLO-generated programs [gpd3.6.1]

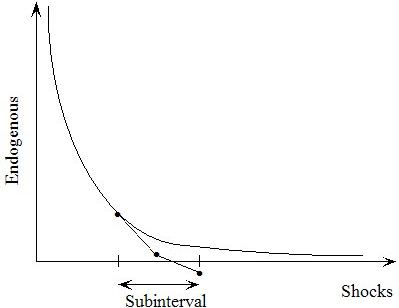

- 26 Multi-step solution methods [gpd3.7]

- 26.1 What method and how many steps to use ? [gpd3.7.1]

- 26.1.1 Example - GTAP liberalization simulation [gpd3.7.1.1]

- 26.1.2 Command file statements for method and steps [gpd3.7.1.2]

- 26.2 Extrapolation accuracy summaries and files [gpd3.7.2]

- 26.2.1 Accuracy estimates and summaries for variables [gpd3.7.2.1]

- 26.2.2 Accuracy estimates and summaries for updated data [gpd3.7.2.2]

- 26.2.3 Extrapolation accuracy files [gpd3.7.2.3]

- 26.2.4 Accuracy summary information is on solution file [gpd5.9.11]

- 26.2.5 Fine print on how extrapolation is done [gpd5.9.10]

- 26.2.6 Command file statements affecting extrapolation accuracy reports [gpd3.7.2.4]

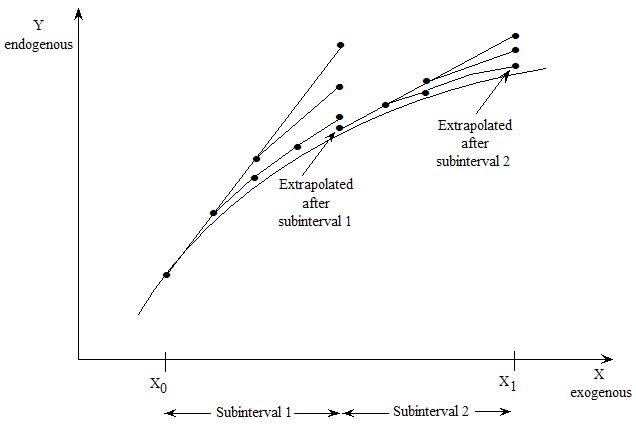

- 26.3 Splitting a simulation into several subintervals [gpd3.7.3]

- 26.4 Automatic accuracy for simulations [gpd3.7.4]

- 26.4.1 Specifying which solutions to use [gpd3.7.4.1]

- 26.4.2 Incompatibilities [gpd3.7.4.2]

- 26.4.3 Adaptive stepsize method [gpd3.7.4.4]

- 26.4.4 Stopping if subintervals become too small [gpd3.7.4.5]

- 26.4.5 Controlling which values are tested [gpd3.7.4.6]

- 26.4.6 Checking progress during a run [gpd3.7.4.7]

- 26.5 Runge-Kutta solution methods [rungekutta]

- 26.5.1 Adaptive stepsize for Runge-Kutta [adaptiverk]

- 26.5.2 Which RK integrator to choose? [whichrk]

- 26.6 Newton's method for levels models [gpd3.7.5]

- 26.6.1 Newton's method and ORANIF [gpd3.7.5.1]

- 26.6.2 Convergence [gpd3.7.5.2]

- 26.6.3 General advice [gpd3.7.5.3]

- 26.6.4 Newton's method is not reliable with mixed models [gpd3.7.5.4]

- 26.6.5 Command file statements for Newton's method [gpd5.9.13]

- 26.7 Homotopy methods and levels equations [gpd3.7.6]

- 26.7.1 Example 1 - solving a system of levels equations [gpd3.7.6.1]

- 26.7.2 Example 2 - finding an intertemporal data set [gpd3.7.6.2]

- 26.7.3 Example 3 - use when adding behaviour to a model [gpd3.7.6.3]

- 26.7.4 Formal documentation about ADD_HOMOTOPY [gpd3.7.6.4]

- 26.7.5 Names for homotopy variables added to levels equations [gpd5.8.6]

- 26.7.6 ADD_HOMOTOPY and NOT_ADD_HOMOTOPY default statements [gpd3.7.6.5]

- 26.8 Options for saving solution and updated data files [gpd3.7.7]

- 26.9 Solutions report perturbations of the initial values [gpd3.7.8]

- 26.9.1 Change differentiation keeps a constant error [gpd3.7.8.1]

- 26.9.2 How can homotopies help? [gpd3.7.8.2]

- 26.1 What method and how many steps to use ? [gpd3.7.1]

- 27 Solution (SL4) files [gpd3.8]

- 27.1 Command file statements related to solution files [gpd3.8.1]

- 27.2 Contents of solution files [gpd3.8.2]

- 27.2.1 TAB and STI files stored on solution file [gpd3.8.2.1]

- 27.2.2 Command file stored on solution file [gpd3.8.2.2]

- 27.3 Displaying Levels results [gpd3.8.3]

- 27.4 SEQ files [seqfiles]

- 28 SLC, UDC, AVC and CVL files [slcfiles]

- 29 Subtotals via GEMSIM or TABLO-generated programs [gpd3.11]

- 29.1 Subtotals from GEMSIM and TABLO-generated programs [gpd3.11.1]

- 29.2 Meaning of subtotals results [gpd3.11.2]

- 29.3 Subtotal example [gpd3.11.3]

- 29.4 More substantial examples [gpd3.11.4]

- 29.5 Processing subtotal results [gpd3.11.5]

- 29.6 Subtotals results depend on the path [gpd3.11.6]

- 30 Solving models and simulation time [gpd3.12]

- 30.1 How GEMPACK programs solve linear equations [gpd3.12.1]

- 30.1.1 GEMPACK 12 LU factorization [gp12lu]

- 30.1.2 Sparse-linear-equation solving routines MA48 and MA28 [gpd3.12.1.1]

- 30.1.3 Which to use? MA48 or MA28 [gpd3.12.1.2]

- 30.1.4 Accuracy warnings from iterative refinement [gpd9.7.6]

- 30.1.5 Iterative refinement and residual ratios [itref2]

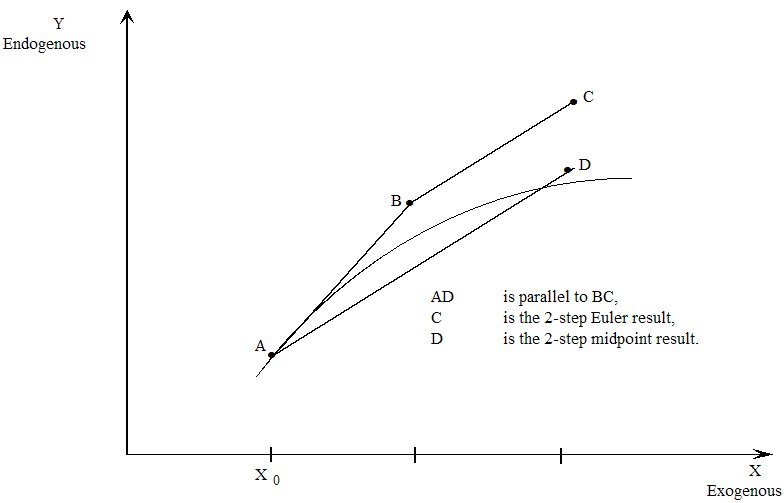

- 30.2 Gragg's method and the midpoint method [gpd3.12.2]

- 30.3 Reusing pivots [gpd3.12.3]

- 30.4 Ignoring/keeping zero coefficients [gpd3.12.5]

- 30.4.1 More details about the "iz1=no" option [gpd3.12.5a]

- 30.5 LU decomposition [gpd5.5]

- 30.6 Equation solving problems [gpd3.12.6]

- 30.7 Work files [gpd3.12.7]

- 30.1 How GEMPACK programs solve linear equations [gpd3.12.1]

- 31 Solving a model in parallel on a machine with two or more processors [gpd9.2]

- 31.1 You need plenty of memory [gpd9.2.1]

- 31.2 Telling the program to run in parallel [gpd9.2.2]

- 31.3 Restrictions [gpd9.2.3]

- 31.4 Servants work in their own directories [gpd9.2.4]

- 31.5 Master log file is complete [gpd9.2.5]

- 31.6 When the servants start and finish [gpd9.2.6]

- 31.6.1 If there are several subintervals [gpd9.2.6.1]

- 31.6.2 If there are complementarity statements [gpd9.2.6.2]

- 31.6.3 If using automatic accuracy [gpd9.2.6.3]

- 31.7 If a fatal error occurs [gpd9.2.7]

- 31.8 Fine print [gpd9.2.8]

- 31.9 Some elapsed times [gpd9.2.9]

- 31.9.1 Comments on the elapsed times reported [gpd9.2.9.1]

- 31.10 Using master/servant under RunDynam [gpd9.2.10]

- 32 Memory management [gpd3.13]

- 32.1 Automatic memory allocation [gpd3.13.1]

- 32.2 Preliminary pass [gpd3.13.2]

- 32.3 MMNZ: Allocating memory for the LU decomposition [gpd3.13.3]

- 32.4 Reporting memory used by TABLO-generated programs and GEMSIM [gpd3.13.4]

- 32.4.1 RunDynam manages MMNZ start values [rundynam.mmnz]

- 32.4.2 TGMEM1 and TGMEM2 parts of memory [gpd3.13.4.1]

- 33 Options for GEMSIM and TABLO-generated programs [gpd3.14]

- 33.1 Options affecting simulations [gpd3.14.1]

- 33.2 Options affecting extrapolation accuracy summaries and files [gpd3.14.2]

- 33.3 Saving updated values from all FORMULA(INITIAL)s [gpd3.14.3]

- 33.4 Options affecting the actions carried out [gpd3.14.4]

- 33.5 Options affecting how writes and displays are carried out [gpd3.14.5]

- 33.6 Options affecting CPU and activity reporting [gpd3.14.6]

- 33.7 Special options for SAGEM [gpd3.14.7]

- 33.7.1 SAGEM options [gpd3.14.7.1]

- 34 Run-time errors [gpd3.15]

- 34.1 Simulation fails because of a singular LHS matrix [gpd3.15.1]

- 34.1.1 Trying to fix a singular matrix problem [gpd3.15.1.1]

- 34.2 Structurally singular matrices [gpd3.15.2]

- 34.3 Reporting arithmetic errors [gpd9.7.4]

- 34.3.1 Updates -- fine print [gpd9.7.4.1]

- 34.3.2 Other arithmetic errors [gpd9.7.4.2]

- 34.4 Suppressing arithmetic errors in GEMSIM and TABLO-generated programs [gpd5.9.15]

- 34.1 Simulation fails because of a singular LHS matrix [gpd3.15.1]

- 35 Summary of command file statements [gpd3.18]

- 35.1 Command files for GEMSIM and TABLO-generated programs [gpd3.18.1]

- 35.2 Command file statements [gpd3.18.2]

- 35.2.1 Method and steps [gpd3.18.2.1]

- 35.2.2 Checking set and element labelling when reading data from HAR files [gpd3.18.2.2]

- 35.2.3 Checking element names when reading sets from HAR files [gpd3.18.2.3]

- 35.2.4 Automatic accuracy [gpd3.18.2.4]

- 35.2.5 Data files [gpd3.18.2.5]

- 35.2.6 Equations files and BCV files [gpd3.18.2.6]

- 35.2.7 Other files [gpd3.18.2.7]

- 35.2.8 TABLO-like statements in command files [gpd3.18.2.8]

- 35.2.9 Closure related [gpd3.18.2.9]

- 35.2.10 Harwell parameter [gpd3.18.2.10]

- 35.2.11 Verbal description [gpd3.18.2.11]

- 35.2.12 Shock related [gpd3.18.2.12]

- 35.2.13 Cumulatively-retained rows [gpd3.18.2.13]

- 35.2.14 Variables on extrapolation accuracy file [gpd3.18.2.14]

- 35.2.15 Subtotals [gpd3.18.2.15]

- 35.2.16 Complementarity [gpd3.18.2.16]

- 35.2.17 LU decomposition and advanced use of pivots [cmfsum.pivots]

- 35.2.18 Newton's Method [cmfsum.newton]

- 35.2.19 Memory management [cmfsum.sharemem]

- 35.2.20 GEMSIM and TABLO-generated program options [gpd3.18.2.17]

- 35.2.21 Automatic Homogeneity Testing [cmfautohomog]

- 35.2.22 For debugging [gpd3.18.2.18]

- 35.2.23 General points [gpd3.18.2.19]

- 35.3 Complete command file example for GEMSIM and TABLO-generated programs [gpd3.18.3]

- 35.4 Command files for SAGEM [gpd3.18.4]

- 35.5 Command file statements for SAGEM [sagem.cmfs]

- 35.5.1 Equations file, Solution file and Harwell Parameter [gpd3.18.4.1]

- 35.5.2 Verbal description (mandatory) [gpd3.18.4.2]

- 35.5.3 Closure related [gpd3.18.4.3]

- 35.5.4 Shock related [gpd3.18.4.4]

- 35.5.5 Individually-retained and cumulatively-retained rows/columns [gpd3.18.4.5]

- 35.5.6 Subtotals [gpd3.18.4.6]

- 35.5.7 SAGEM options [gpd3.18.4.7]

- 35.5.8 General points [gpd3.18.4.8]

- 35.6 Complete command file example for SAGEM [gpd3.18.5]

- 36 GEMPACK Windows programs [gpd4.2]

- 36.1 WinGEM -- the Windows interface to GEMPACK [gpd4.2.1]

- 36.2 ViewHAR for looking at or modifying data on a header array file [gpd4.2.2]

- 36.2.1 The Charter [gpd4.2.2.1]

- 36.3 ViewSOL for looking at simulation results on a solution file [gpd4.2.3]

- 36.4 TABmate for working on TABLO input files or command files [gpd4.2.4]

- 36.5 RunGEM for model users [gpd4.2.5]

- 36.5.1 TABmate and RunGEM for model developers? [gpd4.2.5.1]

- 36.5.2 Preparing a model for use with RunGEM [gpd4.2.5.2]

- 36.5.3 Systematic sensitivity analysis via RunGEM [gpd4.2.5.3]

- 36.5.4 Systematic sensitivity analysis [gpd4.2.5.4]

- 36.6 AnalyseGE -- assisting in the analysis of simulation results [gpd4.2.6]

- 36.6.1 Using AnalyseGE with data manipulation TAB files [gpd4.2.6.1]

- 36.6.2 Using AnalyseGE when a simulation crashes [gpd4.2.6.2]

- 36.6.3 Subtotals in AnalyseGE [gpd5.6.1]

- 36.6.4 Decomposing selected expression [gpd5.6.2]

- 36.6.5 Analysing closure problems with AnalyseGE [anal-clos]

- 36.7 RunDynam for recursive dynamic models [gpd4.2.7]

- 37 Command-line programs for working with header array files [gpd4.4]

- 37.1 SEEHAR: prepare a print file or CSV spreadsheet file [gpd4.4.1]

- 37.1.1 SEEHAR - options [gpd4.4.1.1]

- 37.1.2 Displays and labelled output from SEEHAR into spreadsheets [gpd4.4.1.2]

- 37.1.3 GAMS output from SEEHAR, TABLO-generated programs and GEMSIM [gpd4.4.1.3]

- 37.1.4 Converting header array files to data bases - SEEHAR option SQL [gpd4.4.1.4]

- 37.1.5 SEEHAR command-line options [seehar12]

- 37.2 CMPHAR: comparing data on header array files [gpd4.4.2]

- 37.2.1 CMPHAR report [gpd4.4.2.1]

- 37.2.2 Significant differences [gpd4.4.2.2]

- 37.2.3 Comparing solution files [gpd4.4.2.3]

- 37.2.4 CMPHAR files on the command line [gpd5.10.1.4]

- 37.2.5 CMPHAR reports difference metric values [gpd5.10.5]

- 37.3 CMBHAR: combining similar header array files [gpd4.4.4]

- 37.4 SUMHAR: summarising a header array file [gpd4.4.3]

- 37.4.1 SUMHAR command-line options [sumhar12]

- 37.5 MergeHAR: combining headers from two HAR files into one [mergehar]

- 37.6 DiffHAR: compare two header or SL4 files [diffhar]

- 37.7 DumpSets: extract sets from HAR file [dumpsets]

- 37.1 SEEHAR: prepare a print file or CSV spreadsheet file [gpd4.4.1]

- 38 Syntax of GEMPACK text data files [gpd4.6]

- 38.1 The "how much data" information [gpd4.6.1]

- 38.1.1 Real or integer data [gpd4.6.1.1]

- 38.1.2 Examples of "how much data" information [gpd4.6.1.2]

- 38.1.3 Character string data [gpd4.6.1.3]

- 38.1.4 Header and longname [gpd4.6.1.4]

- 38.1.5 Coefficient [gpd4.6.1.5]

- 38.1.6 Comments [gpd4.6.1.6]

- 38.1.7 Complete example of GEMPACK text data file [gpd4.6.1.7]

- 38.2 Data values in text files [gpd4.6.2]

- 38.2.1 Array sizes [gpd4.6.2.1]

- 38.2.2 Actual data values of the array [gpd4.6.2.2]

- 38.2.3 Row order [gpd4.6.2.3]

- 38.2.4 Column order [gpd4.6.2.4]

- 38.2.5 Spreadsheet order [gpd4.6.2.5]

- 38.2.6 Real numbers [gpd4.6.2.6]

- 38.2.7 Repeated values in arrays [gpd4.6.2.7]

- 38.2.8 Character strings [gpd4.6.2.8]

- 38.2.9 Multi-dimensional real arrays [gpd4.6.2.9]

- 38.2.10 Maximum length of lines of a file [gpd4.6.2.10]

- 38.2.11 Differences between row order and spreadsheet style data [gpd4.6.2.11]

- 38.2.12 Text editors and text files [gpd4.6.2.12]

- 38.2.13 Advice for CSV files [gpd4.6.2.13]

- 38.2.14 Element name/number labels [gpd4.6.2.14]

- 38.3 Using TABLO to read, manipulate, and write HAR or text files [gpd1.4.4]

- 38.1 The "how much data" information [gpd4.6.1]

- 39 SLTOHT: processing simulation results [gpd4.8]

- 39.1 Introducing SLTOHT [gpd4.8.1]

- 39.1.1 Ways to run SLTOHT [runningsltoht]

- 39.2 Using SLTOHT to make a HAR file [sltohthar]

- 39.3 Mapping files [gpd4.8.3]

- 39.3.1 Header mapping file [gpd4.8.6.1]

- 39.4 Running SLTOHT from the command line [gpd5.10.1.3]

- 39.5 Running SLTOHT interactively [gpd4.8.4]

- 39.5.1 Choosing solutions for output [gpd4.8.4.1]

- 39.6 Undefined solution values [gpd4.8.4.2]

- 39.7 Levels results - options NLV, LV and SHL [gpd4.8.5]

- 39.8 How SLTOHT arranges several solutions in HAR file output [gpd4.8.6]

- 39.9 GEMPACK text data file output using options SIR, SIC or SS [gpd4.8.7]

- 39.10 Other SLTOHT options [gpd4.8.9]

- 39.10.1 SLTOHT option VAI: variable arguments ignored in HAR output [gpd4.8.6.2]

- 39.10.2 SLTOHT option NEL [gpd4.8.9nel]

- 39.10.3 SLTOHT option SEP [gpd4.8.9sep]

- 39.10.4 SLTOHT option HSS: produce both HAR and spreadsheet outputs [gpd4.8.9hss]

- 39.10.5 SLTOHT option SHK: shock CMF output [gpd4.8.8]

- 39.10.6 SLTOHT option DES [gpd4.8.9des]

- 39.1 Introducing SLTOHT [gpd4.8.1]

- 40 SLTOHT for spreadsheets [gpd4.9]

- 40.1 Spreadsheet mapping files [gpd4.9.1]

- 40.1.1 Example of a spreadsheet mapping file (option SSS) [gpd4.8.3.1]

- 40.1.2 Syntax rules for spreadsheet mapping files [gpd4.9.1.1]

- 40.2 Options spreadsheet (SS) and short spreadsheet (SSS) [gpd4.9.2]

- 40.3 Output of tables using options SES or SSE [gpd4.9.3]

- 40.3.1 Output of levels results using option SES [gpd4.9.3.1]

- 40.3.2 Three and higher dimensional arrays of results [gpd4.9.3.2]

- 40.4 Side-by-side results (SES, SS, SSS or SSE output) [gpd4.9.4]

- 40.5 Tables suitable for producing graphs [gpd4.9.5]

- 40.1 Spreadsheet mapping files [gpd4.9.1]

- 41 ACCUM and DEVIA : accumulation and differences [gpd4.10]

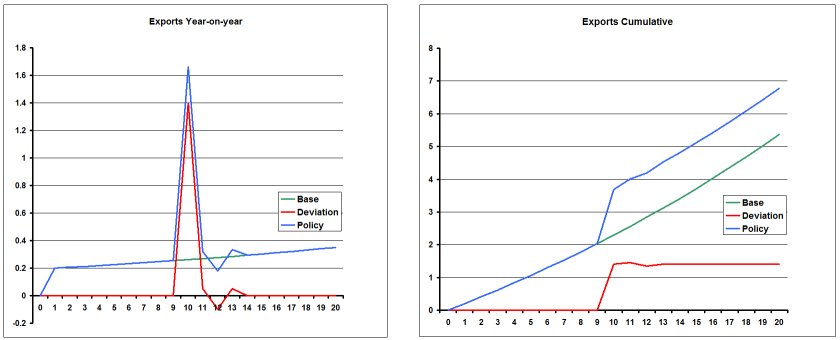

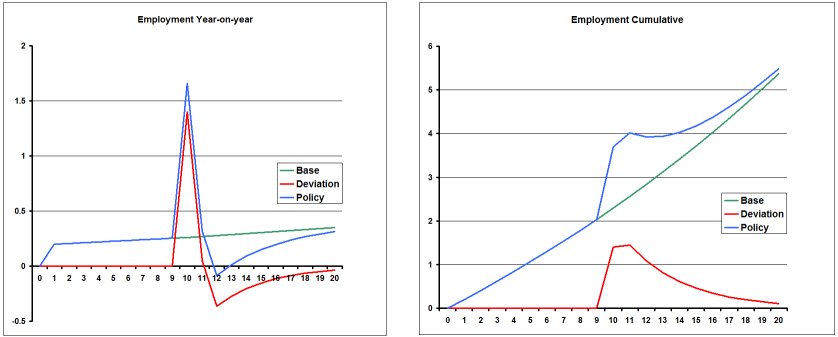

- 41.1 Use with dynamic forecasting models [gpd4.10.1]

- 41.2 ACCUM [gpd4.10.2]

- 41.2.1 Columns showing totals and averages [gpd4.10.2.1]

- 41.2.2 Year-on-year results or accumulated results (option ACC) [gpd4.10.2.2]

- 41.2.3 Accumulated indexes (option ACI) [gpd4.10.2.3]

- 41.2.4 Handling subtotals results in ACCUM [gpd4.10.2.4]

- 41.3 Using DEVIA to prepare a spreadsheet table for the differences [gpd4.10.3]

- 41.3.1 Making sure DEVIA knows change/percent-change variables (option SOL) [gpd4.10.3.1]

- 41.3.2 Year-on-year differences (option NAC) [gpd4.10.3.2]

- 41.3.3 Subtotals results in DEVIA [gpd4.10.3.3]

- 41.3.4 Do subtotals for policy shocks add up to the policy deviation? [dyndevsubtot]

- 41.4 Suppressing arithmetic errors in ACCUM, DEVIA and CMBHAR [gpd5.10.3]

- 42 Hands-on tutorials for models supplied with GEMPACK [gpd8.1]

- 42.1 Overview of chapters 43 to 46 [gpd8.1b]

- 43 Getting started with GEMPACK via WinGEM [gpd8.2]

- 43.1 GEMPACK examples for new users [gpd8.2.1]

- 43.2 Locating the example files [gpd8.2.2]

- 43.2.1 Editing text files in WinGEM [gpd8.2.2.1]

- 43.3 Examples using the Stylized Johansen model SJ [gpd8.2.4]

- 43.3.1 Starting WinGEM [gpd8.2.4.1]

- 43.3.2 Preparing a directory for model SJ [gpd8.2.4.2]

- 43.3.3 Setting the working directory [gpd8.2.4.3]

- 43.3.4 Looking at the data directly using ViewHAR [gpd8.2.4.4]

- 43.3.5 TABLO-generated program or GEMSIM? [gpd8.2.4.5]

- 43.3.6 The example simulation using a TABLO-generated program [gpd8.2.4.6]

- 43.3.7 The example simulation using GEMSIM [gpd8.2.4.7]

- 43.3.8 Source-code version : use GEMSIM or TABLO-generated program? [gpd8.2.4.8]

- 43.3.9 The updated data -- another result of the simulation [gpd8.2.4.9]

- 43.3.10 Several Johansen simulations [gpd8.2.4.10]

- 43.3.11 Changing the closure and shocks [gpd8.2.4.11]

- 43.3.12 Correcting errors in TABLO input files [gpd8.2.4.12]

- 43.3.13 Creating the base data header array file [gpd8.2.4.13]

- 43.3.14 Modifying data on a header array file [gpd8.2.4.14]

- 43.3.15 Condensing the model [gpd8.2.4.15]

- 43.3.16 Transferring simulation results to a spreadsheet using SLTOHT [gpd8.2.4.16]

- 43.3.17 Using SEEHAR to look at data [gpd8.2.4.17]

- 43.3.18 Analysing simulation results using AnalyseGE [gpd8.2.4.18]

- 43.3.19 What next ? [gpd8.2.4.19]

- 43.4 Miniature ORANI model examples [gpd8.2.5]

- 43.4.1 Preparing a directory for model MO [gpd8.2.5.1]

- 43.4.2 Set the working directory [gpd8.2.5.2]

- 43.4.3 Examine the database for MO [gpd8.2.5.3]

- 43.4.4 Implementing the model MO using TABLO [gpd8.2.5.4]

- 43.4.5 Simulation using the command file MOTAR.CMF [gpd8.2.5.5]

- 43.4.6 Several Johansen simulations using SAGEM [gpd8.2.5.6]

- 43.4.7 Homogeneity test using SAGEM [gpd8.2.5.7]

- 43.4.8 Modifying the closure for MO [gpd8.2.5.8]

- 43.5 Examples for global trade analysis Project model GTAP94 [gpd8.2.6]

- 43.5.1 Examining the GTAP data directly [gpd8.2.6.1]

- 43.5.2 A GTAP3x3 simulation reducing one distortion [gpd8.2.6.2]

- 43.5.3 Implementation of GTAP3x3 [gpd8.2.6.3]

- 43.5.4 Running a simulation with condensed GTAP3x3 [gpd8.2.6.4]

- 43.5.5 GTAP multi-fibre agreement simulation with a 10x10 aggregation [gpd8.2.6.5]

- 43.5.6 Implementation of GTAP1010 [gpd8.2.6.6]

- 43.5.7 Running a simulation with condensed GTAP1010 [gpd8.2.6.7]

- 43.5.8 Running ViewSOL to look at the results [gpd8.2.6.8]

- 43.5.9 Comparison of times for GEMSIM and TG program [gpd8.2.6.9]

- 43.5.10 GTAP APEC liberalization (including results decomposition) [gpd8.2.6.10]

- 43.5.11 Carrying out the APEC liberalization simulation (with subtotals) [gpd8.2.6.11]

- 43.5.12 Looking at the results via ViewSOL [gpd8.2.6.12]

- 43.6 Examples for global trade analysis Project model GTAP61 [gpd8.2.7]

- 43.6.1 Preparing a directory for model GTAP61 [gpd8.2.7.1]

- 43.6.2 Set the working directory [gpd8.2.7.2]

- 43.6.3 Examine the TAB file [gpd8.2.7.3]

- 43.6.4 View the data files for GTAP61 [gpd8.2.7.4]

- 43.6.5 Implement the model using TABLO [gpd8.2.7.5]

- 43.6.6 Running a simulation with condensed GTAP61 [gpd8.2.7.6]

- 43.7 Examples with the ORANIG98 model [gpd8.2.8]

- 43.7.1 Preparing a directory for model ORANIG98 [gpd8.2.8.1]

- 43.7.2 Set the working directory [gpd8.2.8.2]

- 43.7.3 Examine the TAB file and the data [gpd8.2.8.3]

- 43.7.4 Implement the model using TABLO [gpd8.2.8.4]

- 43.7.5 Running a simulation with condensed ORANIG98 [gpd8.2.8.5]

- 43.7.6 Comparison of times for GEMSIM and TG program [gpd8.2.8.6]

- 43.7.7 Decomposition of simulation results [gpd8.2.8.7]

- 43.8 Examples with the ORANIG01 model [gpd8.2.9]

- 43.8.1 Preparing a directory for model ORANIG01 [gpd8.2.9.1]

- 43.8.2 Set the working directory [gpd8.2.9.2]

- 43.8.3 Examine the TAB file and data [gpd8.2.9.3]

- 43.8.4 Implement the model using TABLO [gpd8.2.9.4]

- 43.8.5 Running simulations with ORANIG01 [gpd8.2.9.5]

- 43.9 Examples with the ORANIF model [gpd8.2.10]

- 43.9.1 Preparing a directory for model ORANIF [gpd8.2.10.1]

- 43.9.2 Set the working directory [gpd8.2.10.2]

- 43.9.3 Examine the TAB file and the data [gpd8.2.10.3]

- 43.9.4 Implement the model using TABLO [gpd8.2.10.4]

- 43.9.5 Running a simulation with condensed ORANIF [gpd8.2.10.5]

- 43.9.6 Comparison of times for GEMSIM and TG program [gpd8.2.10.6]

- 43.10 Other example models [gpd8.2.11]

- 43.11 Building your own models [gpd8.2.12]

- 43.12 Using RunGEM for simulations [gpd8.2.13]

- 44 Command prompt: hands-on computing [gpd8.3]

- 44.1 Examples using the Stylized Johansen model SJ [gpd8.3.1]

- 44.1.1 Preparing a directory for model SJ [gpd8.3.1.1]

- 44.1.2 Looking at the data directly [gpd8.3.1.2]

- 44.1.3 An example simulation with Stylized Johansen [gpd8.3.1.3]

- 44.1.4 The example simulation using a TABLO-generated program [gpd8.3.1.4]

- 44.1.5 The example simulation using GEMSIM [gpd8.3.1.5]

- 44.1.6 Source-code version : use GEMSIM or TABLO-generated program? [gpd8.3.1.6]

- 44.1.7 The updated data - another result of the simulation [gpd8.3.1.7]

- 44.1.8 Preparing tables and graphs for a report [gpd8.3.1.8]

- 44.1.9 Changing the closure and shocks [gpd8.3.1.9]

- 44.1.10 Several simulations using SAGEM [gpd8.3.1.10]

- 44.1.11 Condensing the model [gpd8.3.1.13]

- 44.2 Miniature ORANI model examples [gpd8.3.2]

- 44.2.1 Preparing a directory for model MO [gpd8.3.2.1]

- 44.2.2 Implementation of the model MO using TABLO [gpd8.3.2.2]

- 44.2.3 Simulation using the TABLO-generated program MO (or GEMSIM) [gpd8.3.2.3]

- 44.2.4 Several simulations at once with SAGEM [gpd8.3.2.4]

- 44.2.5 Homogeneity test using SAGEM [gpd8.3.2.5]

- 44.2.6 Modifying a closure [gpd8.3.2.6]

- 44.3 Other models supplied [gpd8.3.3]

- 44.4 Working with TABLO input files [gpd8.3.4]

- 44.1 Examples using the Stylized Johansen model SJ [gpd8.3.1]

- 45 Using RunGEM for simulations [gpd8.5]

- 45.1 Stylized Johansen [gpd8.5.1]

- 45.1.1 Preparing the model files for RunGEM [gpd8.5.1.1]

- 45.1.2 Starting RunGEM [gpd8.5.1.2]

- 45.1.3 Modifying the closure and shocks [gpd8.5.1.3]

- 45.2 ORANIG98 model [gpd8.5.2]

- 45.2.1 Prepare the model files for RunGEM [gpd8.5.2.1]

- 45.2.2 Starting RunGEM [gpd8.5.2.2]

- 45.2.3 Wage cut simulation [gpd8.5.2.3]

- 45.2.4 Long run simulation [gpd8.5.2.4]

- 45.3 Preparing models for use by others with RunGEM [gpd8.5.3]

- 45.4 TABmate + RunGEM [gpd8.5.4]

- 45.5 RunGEM for students [gpd8.5.5]

- 45.1 Stylized Johansen [gpd8.5.1]

- 46 Using AnalyseGE to analyse simulation results [gpd8.6]

- 46.1 Analysing a Stylized Johansen simulation [gpd8.6.1]

- 46.1.1 Starting AnalyseGE [gpd8.6.1.1]

- 46.1.2 Looking at the shocks for the simulation [gpd8.6.1.2]

- 46.1.3 Real GDP in Stylized Johansen [gpd8.6.1.3]

- 46.1.4 What happens to real GDP from the income side? [gpd8.6.1.4]

- 46.1.5 Summary of real GDP analysis from income side [gpd8.6.1.5]

- 46.1.6 Real GPD from the income side via equation E_realva [gpd8.6.1.6]

- 46.1.7 Real GDP from the expenditure side [gpd8.6.1.7]

- 46.1.8 Demand for factors in each sector - A first look [gpd8.6.1.8]

- 46.1.9 What happens to the prices of labor and capital? [gpd8.6.1.9]

- 46.1.10 Demand for factors in each sector - A final look [gpd8.6.1.10]

- 46.1.11 Prices of the factors again [gpd8.6.1.11]

- 46.1.12 What happens to the prices of the commodities? [gpd8.6.1.12]

- 46.1.13 What happens to household demand for the commodities? [gpd8.6.1.13]

- 46.1.14 What happens to total demand for the commodities? [gpd8.6.1.14]

- 46.1.15 Results for factor prices - why opposite signs? [gpd8.6.1.15]

- 46.1.16 Conclusion [gpd8.6.1.16]

- 46.1.17 Proof that real GDP and price indices are equal from both sides [gpd8.6.1.17]

- 46.2 What next ? [gpd8.6.2]

- 46.1 Analysing a Stylized Johansen simulation [gpd8.6.1]

- 47 Print edition ends here [endprint]

- 48 Working with GEMPACK command-line programs [gpd1.5]

- 48.1 Responding to prompts [gpd1.5.1]

- 48.1.1 Responding to prompts (upper case or lower case) [gpd1.5.1.1]

- 48.1.2 Default response to questions and prompts [gpd1.5.1.2]

- 48.1.3 How the current directory affects filename responses [gpd1.5.1.3]

- 48.1.4 How the current directory affects filenames in command files [gpd1.5.1.4]

- 48.1.5 Directories must already exist [gpd1.5.1.5]

- 48.1.6 GEMPACK programs echo current directory [gpd1.5.1.6]

- 48.2 Comments in input from the terminal [gpd1.5.2]

- 48.3 Interactive and batch operation, stored-input and log files [gpd1.5.3]

- 48.3.1 Invalid input when using options 'sif' or 'asi' [gpd1.5.3.1]

- 48.3.2 Differences between batch and interactive program dialogues [gpd1.5.3.2]

- 48.3.3 Terminal output and log files [gpd1.5.3.3]

- 48.3.4 Interrupting command-line programs [gpd6.7.1.4]

- 48.3.5 Controlling screen output from command-line programs [gpd6.7.1.5]

- 48.3.6 LOG files and early errors [gpd1.5.3.4]

- 48.4 Other program options [gpd1.5.4]

- 48.4.1 Other options common to all programs [gpd1.5.4.1]

- 48.4.2 Options specific to different programs [gpd1.5.4.2]

- 48.5 Specifying various files on the command line [gpd1.5.5]

- 48.5.1 command-line option "-los" (alternative to "-lon") [gpd1.5.5.1]

- 48.5.2 command-line option "-lic" to specify the GEMPACK licence [gpd1.5.5.2]

- 48.5.3 Abbreviations for LOG file name on command line [gpd1.5.5.3]

- 48.5.4 The command line with other programs [gpd5.10.1.2a]

- 48.1 Responding to prompts [gpd1.5.1]

- 49 Miscellaneous information [miscstuff]

- 49.1 Details about file creation information [gpd9.7.5]

- 49.2 Programs report elapsed time [gpd9.7.2]

- 49.3 Windows PCs, Fortran compilers and memory management [gpd1.5.6]

- 49.3.1 64-bit processors and operating systems on PCs [gpd9.4]

- 49.3.2 Large-Address-Aware (LAA) programs [laa-exe]

- 49.3.3 Memory limits with Release 11/12 of GEMPACK [gpd9.4.1]

- 49.3.4 Other size limits [gpd9.4.1.1]

- 49.3.5 Limits for GEMSIM [gpd9.4.1.2]

- 49.4 Error messages [gpd1.5.7]

- 49.5 Programs set exit status [gpd9.7.20.2]

- 50 Code options when running TABLO [gpd2.5]

- 50.0.1 Code options in TABLO affecting the possible actions [gpd2.5.1.1]

- 50.0.2 Code option in TABLO affecting compilation speed [gpd2.5.1.2]

- 50.0.3 Other code options in TABLO [gpd2.5.1.4]

- 51 Simulations for models with complementarities [gpd3.16]

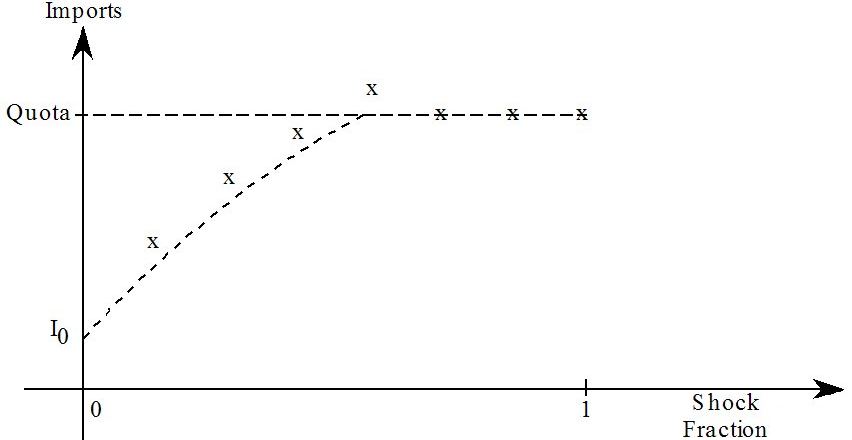

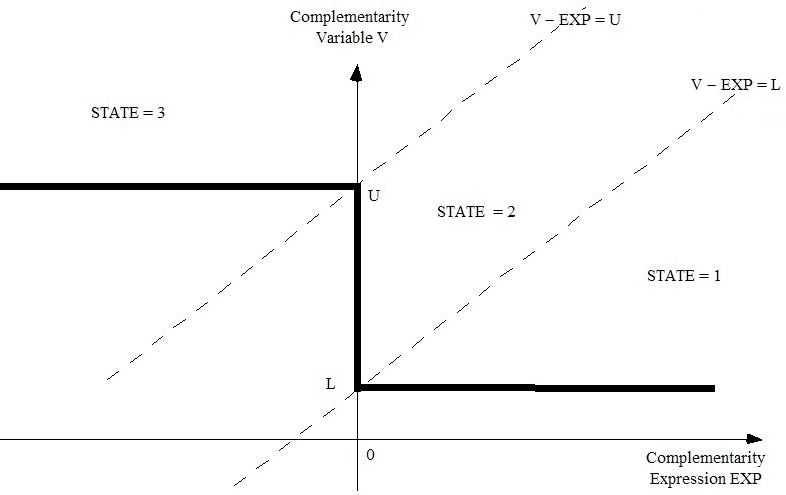

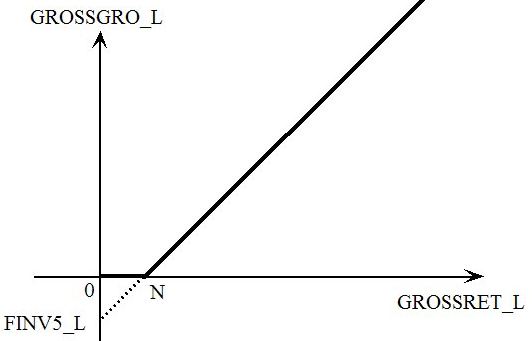

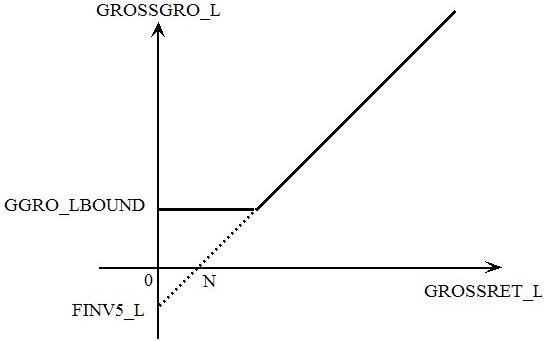

- 51.1 The basic ideas [gpd3.16.1]

- 51.1.1 If only we knew the post-simulation states [gpd3.16.1.1]

- 51.1.2 Finding the post-simulation states [gpd3.16.1.2]

- 51.1.3 Combining the two ideas [gpd3.16.1.3]

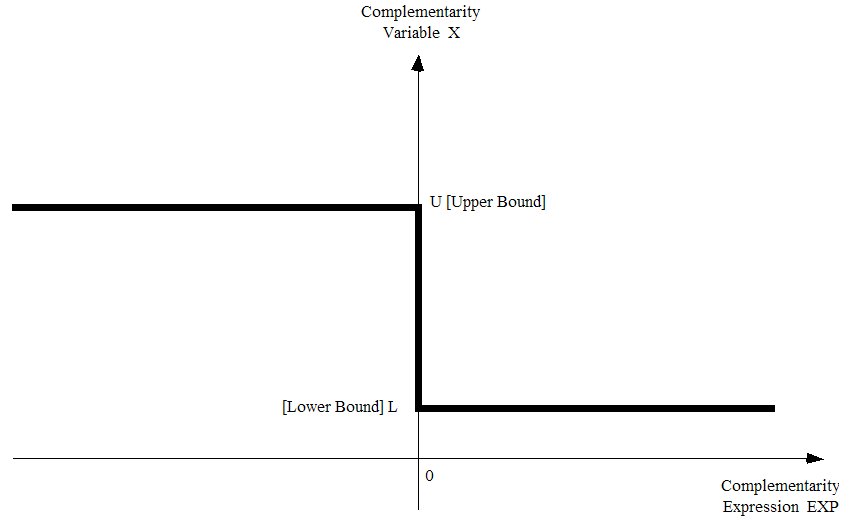

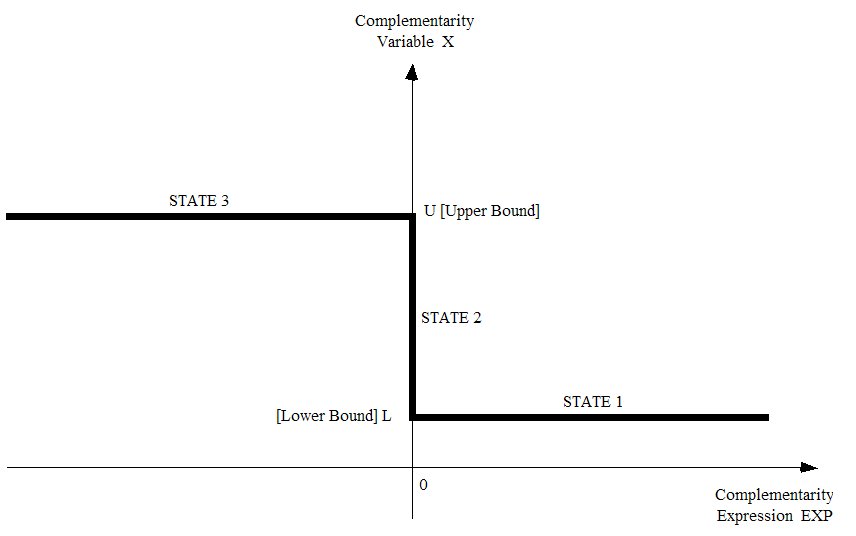

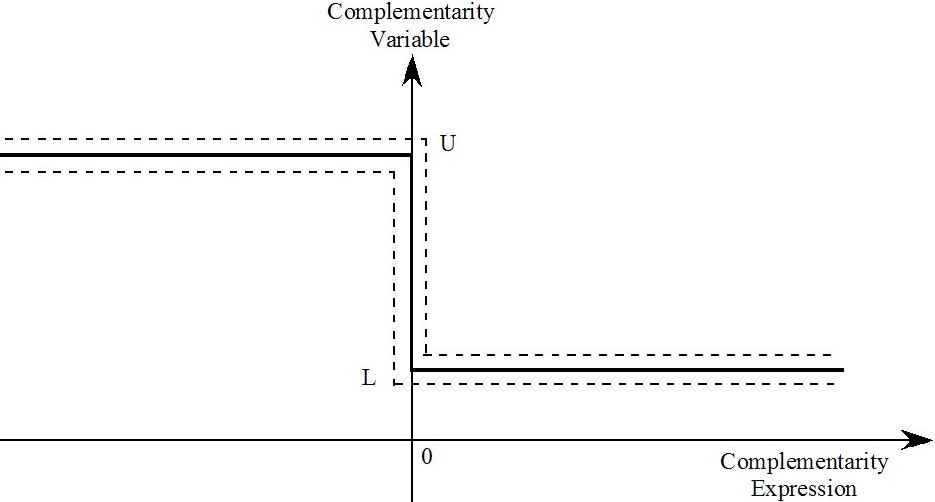

- 51.2 States of the complementarity [gpd3.16.2]

- 51.2.1 Complementarities with only one bound [gpd3.16.2.1]

- 51.3 Writing the TABLO code for complementarities [gpd3.16.3]

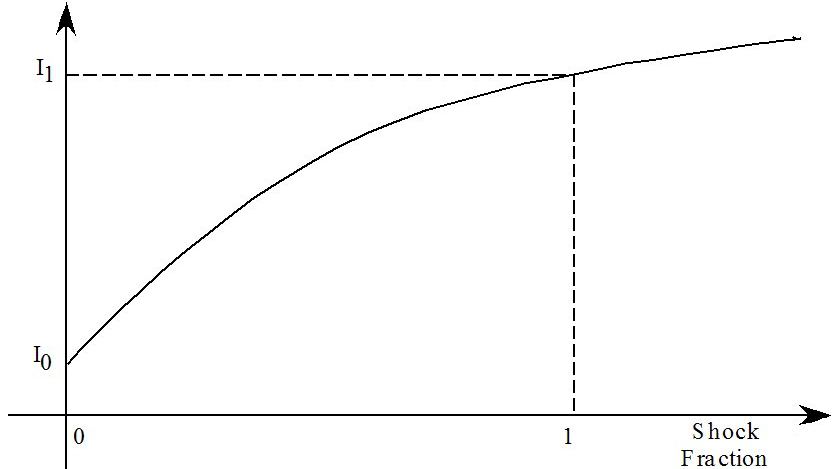

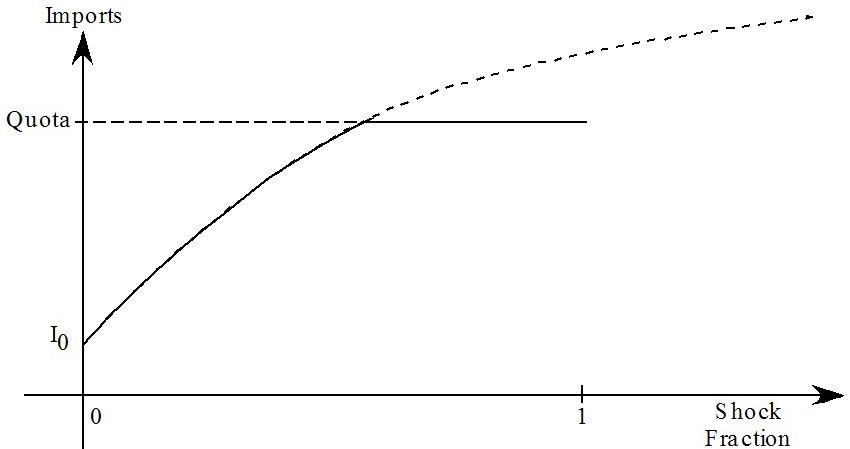

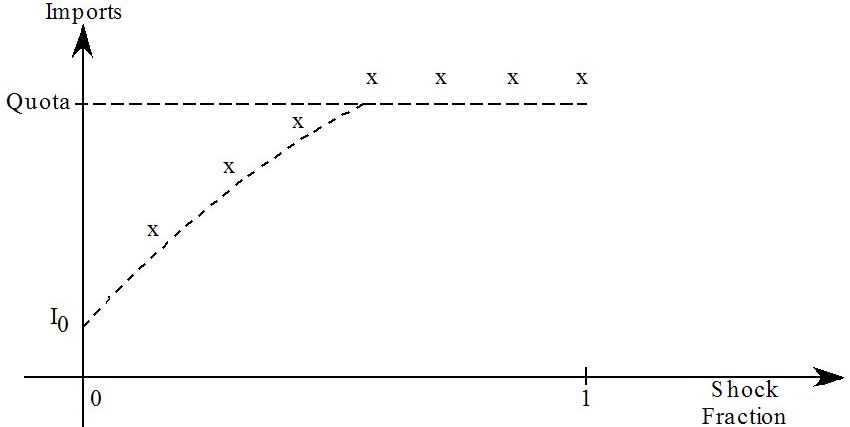

- 51.3.1 Import quota example [gpd3.16.3.1]

- 51.3.2 Example: MOIQ (miniature ORANI with import quotas) [gpd3.16.3.2]

- 51.3.3 Specifying bounds for the complementarity variable [gpd3.16.3.3]

- 51.3.4 Scale the complementarity expression if possible [gpd3.16.3.4]

- 51.3.5 Levels variables are required [gpd3.16.3.5]

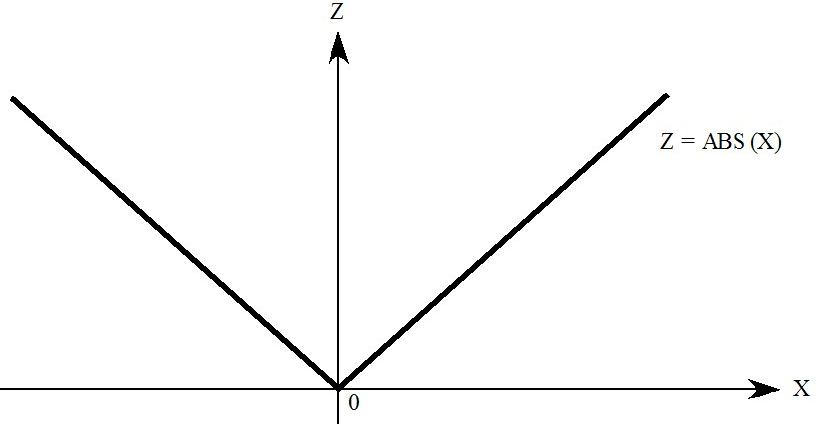

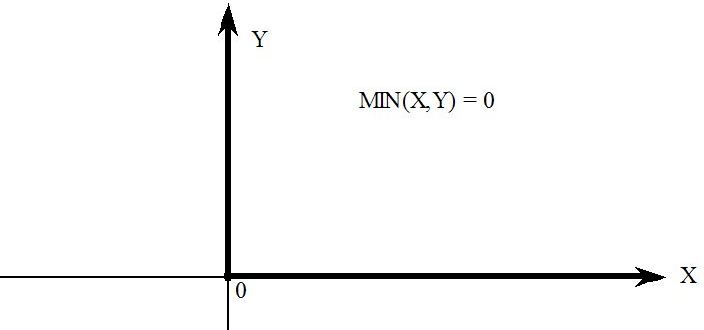

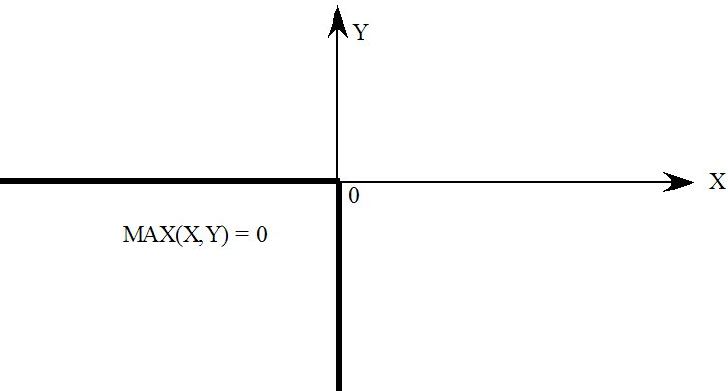

- 51.3.6 Complementarities are related to MAX and MIN [gpd3.16.3.6]

- 51.3.7 Equivalent ways of expressing complementarities with a single bound [gpd3.16.3.7]

- 51.4 Hands-on examples with MOIQ [gpd3.16.4]

- 51.4.1 Decreasing a quota volume - command file MOIQ1C.CMF [gpd3.16.4.1]

- 51.4.2 Decreasing both quota volumes - command file MOIQ1D.CMF [gpd3.16.4.2]

- 51.4.3 No approximate run - command file MOIQ1CX.CMF [gpd3.16.4.3]

- 51.5 Other features of complementarity simulations [gpd3.16.5]

- 51.5.1 Closure and shocks [gpd3.16.5.1]

- 51.5.2 Running TABLO and simulations [gpd3.16.5.2]

- 51.5.3 Program reports state changes [gpd3.16.5.3]

- 51.5.4 Program checks states and bounds at end of run [gpd3.16.5.4]

- 51.5.5 Checking the simulation results [gpd3.16.5.5]

- 51.5.6 Omitting the accurate run [gpd3.16.5.6]

- 51.5.7 Subtotals when there are complementarities [gpd3.16.5.6a]

- 51.6 Command file statements for complementarity simulations [gpd3.16.6]

- 51.7 Technical details about complementarity simulations [gpd3.16.7]

- 51.7.1 How the closure and shocks change for the accurate simulation [gpd3.16.7.1]

- 51.7.2 Extra variables introduced with each complementarity [gpd3.16.7.2]

- 51.7.3 Step may be redone during approximate run [gpd3.16.7.3]

- 51.7.4 Several subintervals and automatic accuracy [gpd3.16.7.4]

- 51.7.5 Checks that the pre- and post-simulation states are accurate [gpd3.16.7.5]

- 51.8 Complementarity examples [gpd3.16.8]

- 51.8.1 Example: MOIQ (miniature ORANI with import quotas) [gpd3.16.8.1]

- 51.8.2 Example: MOTRQ (miniature ORANI with tariff-rate quotas) [gpd3.16.8.2]

- 51.8.3 Example: MOTR2 (alternative COMPLEMENTARITY statement) [gpd3.16.8.3]

- 51.8.4 Example: G5BTRQ (GTAP with bilateral tariff-rate quotas) [gpd3.16.8.4]

- 51.8.5 Alternative form of the complementarity - G5BTR2.TAB [gpd3.16.8.5]

- 51.8.6 Example: G5GTRQ (GTAP with global and bilateral trqs) [gpd3.16.8.6]

- 51.8.7 Example: G94-XQ (GTAP94 with bilateral export quotas) [gpd3.16.8.7]

- 51.8.8 Example: G94-IQ (GTAP94 with bilateral import quotas) [gpd3.16.8.8]

- 51.9 Piecewise linear functions via complementarities [gpd3.16.9]

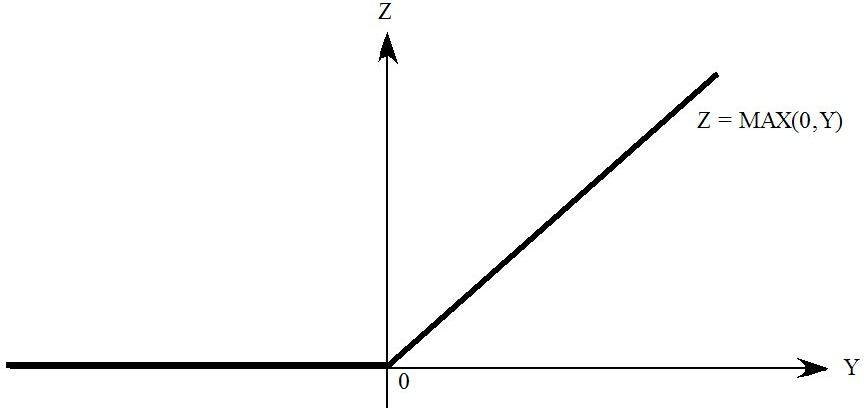

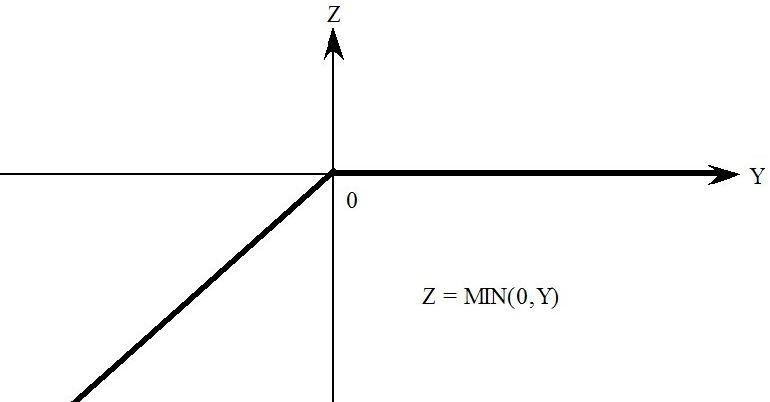

- 51.9.1 Max and min [gpd3.16.9.1]

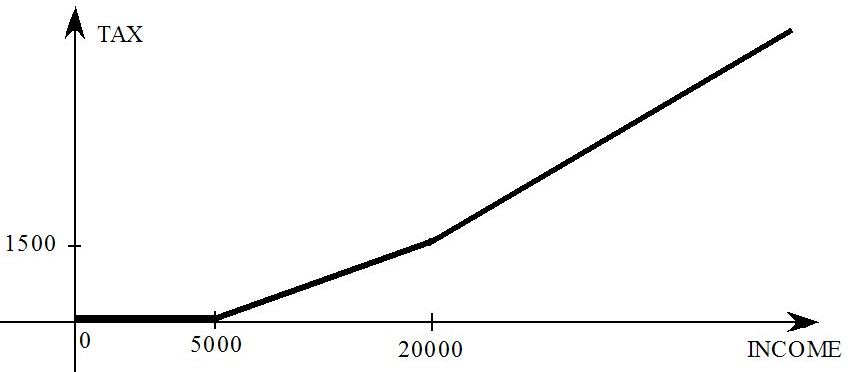

- 51.9.2 Progressive income tax schedule [gpd3.16.9.2]

- 51.9.3 Other piecewise linear functions [gpd3.16.9.3]

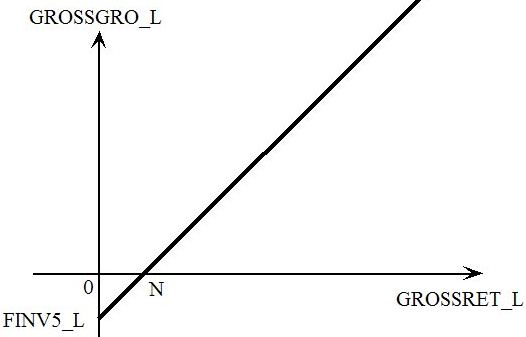

- 51.10 Example: ORANIGRD (ensuring investment stays non-negative) [gpd3.16.10]

- 51.10.1 OGRD1.TAB and negative investment [gpd3.16.10.1]

- 51.10.2 OGRD2.TAB - A complementarity to ensure non-negative investment [gpd3.16.10.2]

- 51.11 Complementarities - speeding up single Euler calculations [gpd9.7.18]

- 51.1 The basic ideas [gpd3.16.1]

- 52 Subtotals with complementarity statements [gpd5.7]

- 52.1 Telling the software when to calculate subtotals [gpd5.7.1]

- 52.2 Calculating subtotals on the approximate run - recommended [gpd5.7.2]

- 52.3 Calculating subtotals on the accurate run - not recommended [gpd5.7.3]

- 52.4 Examples: MOIQ3.CMF and related simulations [gpd5.7.4]

- 52.4.1 Example: MOIQ3AC.CMF simulation [gpd5.7.4.1]

- 52.4.2 Several subintervals [gpd5.7.4.2]

- 52.4.3 MOIQ3.CMF subtotals calculated during approximate run [gpd5.7.4.3]

- 52.4.4 MOIQ3AC.CMF subtotals calculated during accurate run (not recommended) [gpd5.7.4.4]

- 52.4.5 Subtotals from accurate run not robust [gpd5.7.4.5]

- 52.4.6 Subtotals from approximate run are robust [gpd5.7.4.6]

- 52.4.7 The initial closure is shown on solution files [gpd5.7.4.7]

- 52.5 Variables with no components exogenous allowed in subtotals [gpd5.7.5]

- 53 More examples of post-simulation processing [postsim2]

- 53.1 Examples -- summaries of the updated data [gpd5.2.1]

- 53.2 Examples -- post-simulation processing of variable results [gpd5.2.2]

- 53.2.1 GTAP -- table of important regional results [gpd5.2.2.1]

- 53.2.2 TERM -- table of important regional results [gpd5.2.2.2]

- 53.2.3 ORANIG03 -- tariff cut simulation results [gpd5.2.2.4]

- 53.3 Sophisticated post-simulation processing [gpd5.2.3]

- 53.3.1 GTAP -- sophisticated post-simulation processing in DECOMP.TAB [gpd5.2.3.1]

- 53.3.2 Example -- SJPS.TAB and SJPSLB.CMF [gpd5.2.8.1]

- 53.3.3 Other examples [gpd5.2.8.2]

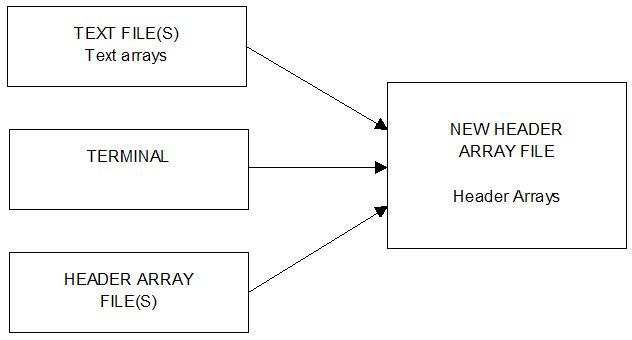

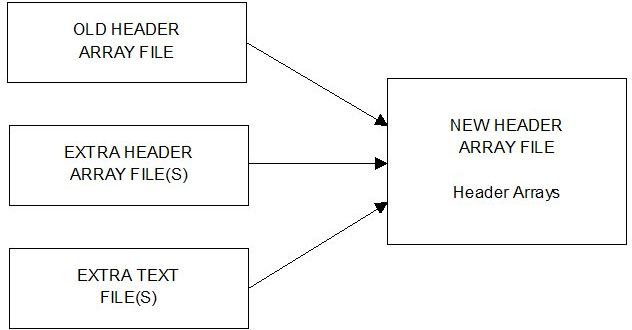

- 54 Using MODHAR to create or modify header array files [gpd4.3]

- 54.1 An overview of the use of MODHAR [gpd4.3.3]

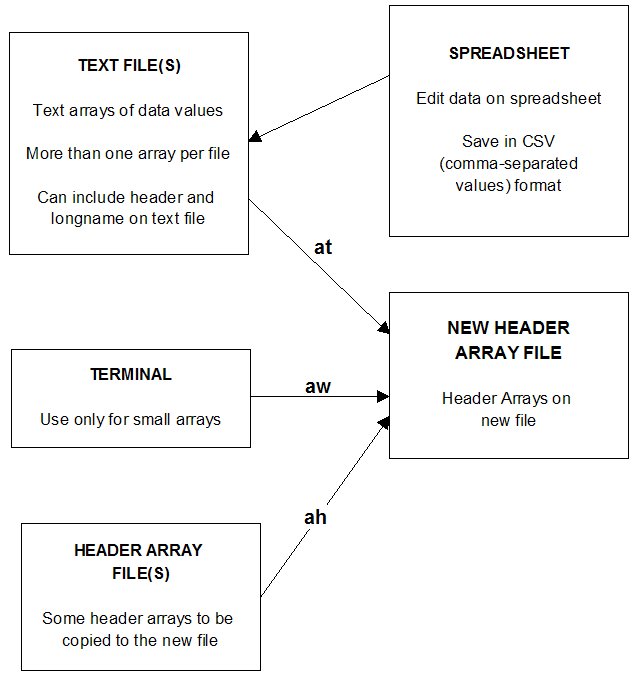

- 54.1.1 Creating a new header array file [gpd4.3.3.1]

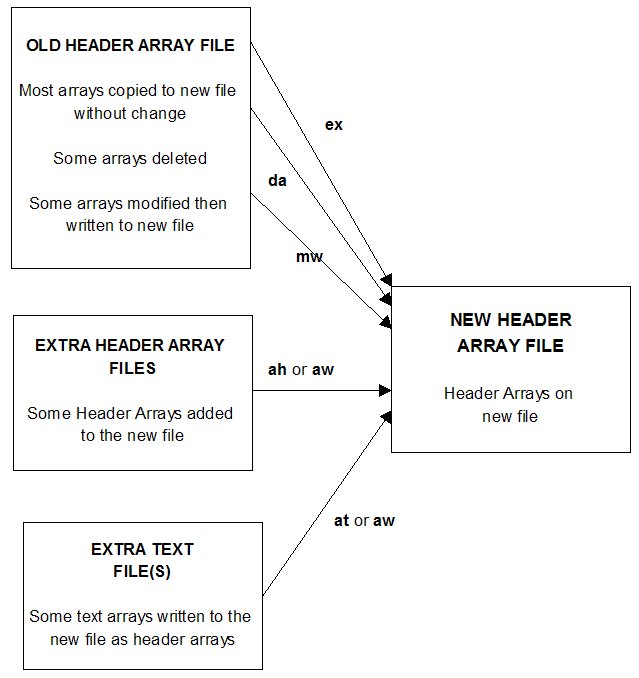

- 54.2 Modifying an existing header array file [gpd4.3.4]

- 54.2.1 Adding arrays from other files using MODHAR [gpd4.3.4.1]

- 54.3 Using MODHAR commands [gpd4.3.5]

- 54.4 Commands for creating a new header array file [gpd4.3.6]

- 54.4.1 Commands for modifying an existing header array file [gpd4.3.6.1]

- 54.4.2 Commands for adding arrays to the new file [gpd4.3.6.2]

- 54.5 Operations on headers or long names [gpd4.3.7]

- 54.6 Commands for complicated modification or addition [gpd4.3.8]

- 54.6.1 MODHAR sub-commands for mw or aw [gpd4.3.8.1]

- 54.6.2 Examples [gpd4.3.8.2]

- 54.6.3 Writing arrays to the new header array file [gpd4.3.8.3]

- 54.6.4 Option ADD when modifying data in MODHAR [gpd4.3.8.4]

- 54.7 Finishing up [gpd4.3.9]

- 54.7.1 Exit commands [gpd4.3.9.1]

- 54.7.2 History of the new file [gpd4.3.9.2]

- 54.8 Complete example of a MODHAR run [gpd4.3.10]

- 54.9 Text files [gpd4.3.11]

- 54.10 Example: Constructing the header array data file for Stylized Johansen [gpd1.4.2]

- 54.11 Example: Modifying data using MODHAR [gpd1.4.3]

- 54.1 An overview of the use of MODHAR [gpd4.3.3]

- 55 Ordering of variables and equations in solution and equation files [ordering]

- 55.1 Ordering of variables [gpd2.4.15.2]

- 55.1.1 Ordering of components of variables [gpd2.4.15.3]

- 55.2 Ordering of the equation blocks [gpd2.4.15.4]

- 55.1 Ordering of variables [gpd2.4.15.2]

- 56 SEENV: to see the closure on an environment file [gpd4.12]

- 56.1 Command file output [gpd4.12.1]

- 56.2 Spreadsheet output [gpd4.12.2]

- 56.3 Shock statements for the bottom of a command file [gpd4.12.3]

- 57 Automated homogeneity testing [autohomog]

- 57.1 What do we mean by nominal and real homogeneity [homotheory]

- 57.2 Specifying Types of Variables in TAB file [homotest1]

- 57.2.1 Ordinary change variables [homotestdelvar]

- 57.2.2 Levels variables [homotest1.0a]

- 57.2.3 Leaving some VPQ types unspecified is OK [homotest1.0b]

- 57.2.4 Domestic and foreign dollars [homotest1.1]

- 57.2.5 Sometimes, non-homogeneity does not matter [homotestdc]

- 57.2.6 Some variables must be of Unspecified type [homotest1.2]

- 57.3 Homogeneity check or simulation [homochoice]

- 57.4 Carrying out a homogeneity check [homocheck]

- 57.4.1 Interpreting the Homogeneity Report HAR file [homotest2]

- 57.4.2 Understanding equation summaries at header 0000 [homotest2.1]

- 57.4.3 Example [homotest2.1.1]

- 57.4.4 Fixing apparent problems [homotest2.2]

- 57.4.5 Best to work with the uncondensed system [homotest2.3]

- 57.5 Automated homogeneity simulations [aut-homosim]

- 57.5.1 Program works out the shocks [aut-homosim1]

- 57.5.2 Checking the simulation results [aut-homosim2]

- 57.6 Detailed example MOLN.TAB [moln-homo]

- 57.6.1 Suboptimalities in MOLN-R11-VPQ.TAB [moln-r11]

- 57.6.2 Homogeneity of MOLN.TAB [moln-homo1]

- 57.6.3 Homogeneity problems with MOLN-R11-VPQ.TAB [moln-homo2]

- 57.7 Detailed example ORANIG-VPQ.TAB [oranig-vpq]

- 58 Several simultaneous Johansen simulations via SAGEM [gpd3.10]

- 58.1 The solution matrix [gpd3.10.1]

- 58.1.1 Individual column results [gpd3.10.1.1]

- 58.1.2 Cumulative or row totals results [gpd3.10.1.2]

- 58.1.3 Subtotal solutions [gpd3.10.1.3]

- 58.1.4 Contents of a SAGEM solution file [gpd3.10.1.4]

- 58.1.5 Viewing the results of a simulation [gpd3.10.1.5]

- 58.2 SAGEM Subtotals [gpd3.10.2]

- 58.2.1 Subtotals using SAGEM [gpd3.10.2.1]

- 58.2.2 Subtotals and sets of shocks in general [gpd3.10.2.3]

- 58.2.3 Viewing subtotals or individual column results using ViewSOL [gpd3.10.2.5]

- 58.3 No individual column results from multi-step simulations [gpd3.10.3]

- 58.4 When to use SAGEM to calculate individual column results [whensagem]

- 58.1 The solution matrix [gpd3.10.1]

- 59 Equations files and LU files [gpd3.9]

- 59.1 Equations files [gpd3.9.1]

- 59.1.1 Using an equations file in SAGEM [gpd3.9.1.1]

- 59.1.2 Differences in step 1 if an equations file is saved [gpd3.9.1.2]

- 59.1.3 Model name, version and identifier [gpd3.9.1.3]

- 59.2 Starting from existing equations and SLC files [gpd9.7.22]

- 59.2.1 Restrictions [gpd9.7.22.1]

- 59.2.2 BCV files no longer produced or supported [gpd9.7.21]

- 59.3 LU files [gpd3.9.3]

- 59.1 Equations files [gpd3.9.1]

- 60 Example models supplied with GEMPACK [models]

- 60.1 Models usually supplied with GEMPACK [gpd8.1c]

- 60.2 Stylized Johansen SJ [gpd8.1.1]

- 60.3 Miniature ORANI MO [gpd8.1.2]

- 60.4 Trade model TRADMOD [gpd8.1.3]

- 60.5 ORANI-type single-country model ORANI-G [gpd8.1.4]

- 60.5.1 ORANIG01 model [gpd8.1.4.1]

- 60.5.2 ORANIG98 model [gpd8.1.4.2]

- 60.6 Single country model of Australia ORANI-F [gpd8.1.5]

- 60.7 Global trade analysis Project GTAP [gpd8.1.6]

- 60.7.1 GTAP61 model [gpd8.1.6.1]

- 60.7.2 GTAP94 model [gpd8.1.6.2]

- 60.8 Dervis, de Melo, Robinson model of Korea -- DMR [gpd8.1.7]

- 60.9 Intertemporal forestry model TREES [gpd8.1.8]

- 60.10 Single sector investment model CRTS [gpd8.1.9]

- 60.11 Five sector investment model 5SECT [gpd8.1.10]

- 60.12 Complementarity examples [gpd8.1.11]

- 60.13 ORANI-INT: an intertemporal rational expectations version of ORANI [gpd8.1.12]

- 60.14 ORANIG-RD : A recursive dynamic version of ORANI-G [gpd8.1.13]

- 61 Pivoting, memory-sharing, and other solution strategies [pivots]

- 61.1 Re-using pivots (MA48) [gpd9.5]

- 61.1.1 Advanced pivot re-use strategy [gpd9.5.1]

- 61.1.2 Examples [gpd9.5.1.1]

- 61.1.3 New command file statements for pivot re-use [gpd9.5.2]

- 61.1.4 Modifying pivots when new entries are in the diagonal blocks [gpd9.5.3]

- 61.1.5 Never modify pivots if a triangular block would become non-triangular [gpd9.5.4]

- 61.1.6 Start of second and subsequent subintervals [gpd9.5.5]

- 61.2 Time taken for the different steps [gpd3.12.4]

- 61.2.1 Total time for multi-step compared to 1-step [gpd3.12.4.1]

- 61.2.2 Using existing files to speed up step 1 [gpd3.12.4.2]

- 61.2.3 Memory reallocation now done within the MA48 routines [gpd5.5.2]

- 61.2.4 LU decomposing transpose of LHS may be much quicker [gpd5.5.3]

- 61.2.5 Alternative pivot strategy in MA48 [gpd5.5.4]

- 61.2.6 Linearization used can affect accuracy [gpd3.12.6.4]

- 61.2.7 Example of increasingly large oscillations [gpd3.12.6.5]

- 61.2.8 Scaling equations [gpd3.12.6.6]

- 61.3 When memory is short (GEMSIM or TG-programs) - Memory sharing [gpd5.4.2]

- 61.3.1 When memory sharing may be useful [gpd5.4.2.1]

- 61.3.2 Running in virtual memory is usually very slow [gpd5.4.2.2]